Patent For Virtual, Augmented and Mixed Reality Shooting Simulator

Uploaded by James "Chip" Northrup

34 pages. Northrup patent for Virtual, Augmented and Mixed Reality Shooting Simulator using a real gun as the game controller

© All Rights Reserved

US 201502

cu») United States

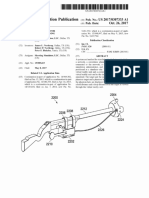

cz) Patent Application Publication — co) Pub. No.: US 2015/0276349 Al

Northrup et al.

(34) SYSTEM AND METHOD FOR

MARKSMANSHIP TRAINING

(7) Applicant: Sho

ws)

lator, LLC, Yaatis, TX.

(72) Inveators: James L. Northrup, Dallas, TX (US)

Robert P, Northrup, Dallas, TX (US);

Peter F Blakeley, Yanis, IX (US)

(73) Assignee: Shoot

ws)

lator, LLC, Yaatis, TX.

Appl. No 14/686,398

Filed: Apr 14,2018

Related

Application Data

(63) Continuation-in-part of application No. 14/140.418.

filedon Jan. 7,2014, which sacontinuaion-in-par of

pplication No, 13890.997, filed on May 9, 2013,

(43) Pub, Date Oct. 1, 2015

Publication Classification

(1) neck

PUG 326 2006.01)

(2) US.CL

fd PAG 32633 2013.01)

(57) ABSTRACT

A system and method for simulating lead of a target includes a network, a simulation administrator and a user device connected to the network, a database connected to the simulation administrator, and a set of position trackers positioned at a simulator site. The user device includes a virtual reality unit and a computer connected to the virtual reality unit and to the network. A generated target is simulated. The target and the user are tracked to generate a phantom target and a phantom halo. The phantom target and the phantom halo are displayed on the virtual reality unit at a lead distance and a drop distance from the target as viewed through the virtual reality unit.

Patent Application Publication Oct. 1, 2015 Sheet 1 of 21 US 2015/0276349 A1

[Sheet 1 of 21: FIG. 1; drawing not reproduced]

[Sheet 2 of 21: drawing not reproduced; legible reference numerals 219, 220, 221, and 225]

[Sheets 3 and 4 of 21: FIG. 3B, FIG. 3C, and FIG. 3D; drawings not reproduced]

[Sheet 5 of 21: FIG. 4A and FIG. 4B; drawings not reproduced; legible reference numerals 404, 405, and 406]

[Sheet 6 of 21: drawing not reproduced]

[Sheet 7 of 21]

FIG. 6 (block diagram of system 600): user device 604; reference numeral 601; simulation administrator 602; simulation database 603.

FIG. 7 (block diagram of the simulation administrator): network interface 703; reference numeral 702; memory holding simulation application 705, which comprises position application 706, statistics engine 707, and target and phantom generator 708.

[Sheet 8 of 21: FIG. 8, printed rotated; drawing not reproduced. Legible block labels: computer, network interface, processor, memory, position tracker, replaceable battery, microphone, headset, sensors, display unit, communication interface, battery, simulation application, position application, statistics engine, target and phantom generator.]

[Sheet 9 of 21: FIG. 9A, printed rotated; drawing not reproduced. Legible block labels: sensors, computer, processor, memory.]

[Sheet 10 of 21: FIG. 9B; drawing not reproduced]

[Sheet 11 of 21: FIG. 10A and a second FIG. 10 view, printed rotated; drawings not reproduced. Legible block labels: computer, communication interface, battery, sensors.]

[Sheet 12 of 21: FIG. 10C and FIG. 10D; drawings not reproduced. Legible label: gyroscope.]

[Sheet 13 of 21: FIG. 11; drawing not reproduced. Legible block labels: processor, battery, memory, communication interface.]

[Sheet 14 of 21: drawing not reproduced. Legible label: computer.]

[Sheet 15 of 21]

FIG. 13 (virtual reality command inputs menu 1300):

1301 Simulation type

1302 Weapon type

1303 Ammunition

1304 Target type

1305 Station select

The remaining menu entries are not legible in this copy.

[Sheet 16 of 21]

FIG. 14 (flow chart 1400, virtual reality runtime):

1401 Set base positions and orientations

1402 Determine target, environment, and weapon data

1403 Generate target and environment

1404 Generate weapon

1405 Launch and display generated target

1406 Determine user view

1407 Generate phantom/halo overlay

1408 Output phantom/halo and weapon to display

1410 Determine shot string

1411 Display shot string

Further blocks whose reference numerals are not legible in this copy: a hit or miss branch, display target hit, record hit, and analyze results.

[Sheet 17 of 21: FIG. 15A; drawing not reproduced]

[Sheet 18 of 21]

FIG. 15B (flow chart):

1513 Determine position data

1514 Determine motion detection data

1515 Integrate data to determine x, y, z position and roll, pitch, yaw orientation

1516 Map position and orientation to simulation environment

1517 Display simulation environment

1518 (label not legible in this copy)

[Sheet 19 of 21]

FIG. 15C (flow chart):

(numeral not legible) Retrieve x, y, z position of weapon and orientation vector of weapon

1521 Convert x, y, z to spherical coordinates

1522 Render weapon in simulation environment at spherical position and orientation vector

1523 Retrieve x, y, z position of user device and x, y, z orientation vector of user device

1524 Convert x, y, z to spherical coordinates

1525 Determine field of view from spherical vector coordinates as a sector of simulation environment

1526 Compare sector to simulation environment

1527 Display portion of simulation environment within sector

1528 Decision block (yes or no; label not legible in this copy)

1529 Display weapon at position and orientation

FIG. 16A (flow chart 1600):

1601 Extrapolate phantom path

1602 Determine phantom halo

1603 Analyze contrast

1604 Determine phantom color/contrast

1605 Determine phantom halo color/contrast
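The coordinate conversion named in the FIG. 15C flow chart can be sketched as follows. This is a minimal illustration, not the implementation of the application: the angle convention (polar angle measured from the +z axis, azimuth measured in the x-y plane from the +x axis), the function names `to_spherical` and `in_sector`, and the idea of testing the field of view as a simple azimuth window are all assumptions introduced here.

```python
import math


def to_spherical(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Convert Cartesian (x, y, z) to (r, polar, azimuth), angles in radians.

    Assumed convention: polar angle from the +z axis, azimuth from the
    +x axis in the x-y plane.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return 0.0, 0.0, 0.0  # direction is undefined at the origin
    polar = math.acos(z / r)
    azimuth = math.atan2(y, x)
    return r, polar, azimuth


def in_sector(azimuth: float, center: float, half_width: float) -> bool:
    """Hypothetical field-of-view test: is azimuth within half_width of center?"""
    # Wrap the difference into [-pi, pi) so the test works across the +/-pi seam.
    diff = (azimuth - center + math.pi) % (2.0 * math.pi) - math.pi
    return abs(diff) <= half_width
```

A renderer built along these lines would convert the user device orientation to an azimuth, then draw only the objects for which `in_sector` returns true.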

[Sheet 20 of 21: FIG. 16B; drawing not reproduced]

[Sheet 21 of 21: FIG. 17, printed rotated; drawing not reproduced]

SYSTEM AND METHOD FOR MARKSMANSHIP TRAINING

CROSS REFERENCE TO RELATED APPLICATION

[0001] This application is a continuation in part of U.S. application Ser. No. 14/149,418 filed Jan. 17, 2014, which is a continuation in part of U.S. application Ser. No. 13/890,997 filed May 9, 2013. Each of the patent applications identified above is incorporated herein by reference in its entirety to provide continuity of disclosure.

FIELD OF THE INVENTION

[0002] The present invention relates to devices for teaching marksmen how to properly lead a moving target with a weapon. More particularly, the invention relates to optical projection systems to monitor and simulate trap, skeet, and sporting clay shooting.

BACKGROUND OF THE INVENTION

[0003] Marksmen typically train and hone their shooting skills by engaging in skeet, trap or sporting clay shooting at a shooting range. The objective for a marksman is to successfully hit a moving target by tracking at various distances and angles and anticipating the delay time between the shot and the impact. In order to hit the moving target, the marksman must aim the weapon ahead of and above the moving target by a distance sufficient to allow a projectile fired from the weapon sufficient time to reach the moving target. The process of aiming the weapon ahead of the moving target is known in the art as "leading the target". "Lead" is defined as the distance between the moving target and the aiming point. The correct lead distance is critical to successfully hit the moving target. Further, the correct lead distance is increasingly important as the distance of the marksman to the moving target increases, the speed of the moving target increases, and the direction of movement becomes more oblique.
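As a rough illustration of why lead grows with range and target speed, the sketch below assumes a constant projectile speed and a target crossing at right angles to the line of fire. Neither assumption comes from this application, and the function name `lead_distance_ft` and the sample speeds are hypothetical.

```python
def lead_distance_ft(target_speed_fps: float, range_ft: float,
                     shot_speed_fps: float) -> float:
    """Distance the target travels while the shot is in flight.

    Simplifying assumptions (not from the source): constant shot speed,
    target crossing perpendicular to the line of fire, no drop or drag.
    """
    if shot_speed_fps <= 0:
        raise ValueError("shot speed must be positive")
    time_of_flight_s = range_ft / shot_speed_fps
    return target_speed_fps * time_of_flight_s


# Doubling the range doubles the lead under these assumptions.
near = lead_distance_ft(60.0, 60.0, 1200.0)
far = lead_distance_ft(60.0, 120.0, 1200.0)
```

Under these assumptions the lead is simply target speed multiplied by time of flight, which is why a longer shot or a faster target demands a larger lead.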

[0004] FIG. 1 depicts the general dimensions of a skeet shooting range. Skeet shooting range 100 has high house 101 and low house 102 separated by distance 111. Distance 111 is about 120 feet. Station 103 is adjacent high house 101. Station 109 is adjacent low house 102. Station 110 is equidistant from high house 101 and low house 102 at distance 112. Distance 112 is about 60 feet. Station 106 is equidistant from high house 101 and low house 102 and generally perpendicular to distance 111 at distance 113. Distance 113 is 45 feet. Station 106 is distance 114 from station 103. Distance 114 is about 75 feet. Stations 104 and 105 are positioned along arc 121 between stations 103 and 106 at equal arc lengths. Each of arc lengths 122, 123, and 124 is about 27 feet. Stations 107 and 108 are positioned along arc 121 between stations 106 and 109 at equal arc lengths. Each of arc lengths 125, 126, and 127 is 26 feet, 8⅜ inches.

[0005] Target flight path 115 extends from high house 101 to marker 117. Marker 117 is positioned about 130 feet from high house 101 along target flight path 115. Target flight path 116 extends from low house 102 to marker 118. Marker 118 is about 130 feet from low house 102 along target flight path 116. Target flight paths 115 and 116 intersect at target crossing point 119. Target crossing point 119 is positioned distance 120 from station 110 and is 15 feet above the ground. Distance 120 is 18 feet. Clay targets are launched from high house 101 and low house 102 along target flight paths 115 and 116, respectively. Marksman 128 positioned at any of stations 103, 104, 105, 106, 107, 108, 109, and 110 attempts to shoot and break the launched clay targets.

[0006] FIG. 2 depicts the general dimensions of a trap shooting range. Trap shooting range 200 comprises firing lanes 201 and trap house 202. Stations 203, 204, 205, 206, and 207 are positioned along radius 214 from center 218 of trap house 202. Radius 214 is distance 216 from center 218. Distance 216 is 48 feet. Each of stations 203, 204, 205, 206, and 207 is positioned at radius 214 at equal arc lengths. Arc length 213 is 9 feet. Stations 208, 209, 210, 211, and 212 are positioned along radius 215 from center 218. Radius 215 is distance 217 from center 218. Distance 217 is 81 feet. Each of stations 208, 209, 210, 211, and 212 is positioned at radius 215 at equal arc lengths. Arc length 227 is 12 feet. Field 226 has length 221 from center 218 along center line 220 of trap house 202 to point 219. Length 221 is 150 feet. Boundary line 222 extends 150 feet from center 218 at angle 224 from center line 220. Boundary line 223 extends 150 feet from center 218 at angle 225 from center line 220. Angles 224 and 225 are each 22° from center line 220. Trap house 202 launches clay targets at various trajectories within field 226. Marksman 228 positioned at any of stations 203, 204, 205, 206, 207, 208, 209, 210, 211, and 212 attempts to shoot and break the launched clay targets.

[0007] FIGS. 3A, 3B, 3C, and 3D depict examples of target paths and associated projectile paths illustrating the wide range of lead distances and distances required of the marksman. The term "projectile," as used in this application, means any projectile fired from a weapon but more typically a shotgun round comprised of pellets of various sizes. For example, FIG. 3A shows a left to right trajectory 303 of target 301 and left to right intercept trajectory 304 for projectile 302. In this example, the intercept path is oblique, requiring the lead to be a greater distance along the positive X axis. FIG. 3B shows a left to right trajectory 307 of target 305 and intercept trajectory 308 for projectile 306. In this example, the intercept path is acute, requiring the lead to be a lesser distance in the positive X direction. FIG. 3C shows a right to left trajectory 311 of target 309 and intercepting trajectory 312 for projectile 310. In this example, the intercept path is oblique and requires a greater lead in the negative X direction. FIG. 3D shows a proximal to distal and right to left trajectory 315 of target 313 and intercept trajectory 316 for projectile 314. In this example, the intercept path is acute and requires a lesser lead in the negative X direction.

[0008] FIGS. 4A and 4B depict a range of paths of a clay target and an associated intercept projectile. The most typical projectile used in skeet and trap shooting is a shotgun round, such as a 12 gauge round or a 20 gauge round. When fired, the pellets of the round spread out into a "shot string" having a generally circular cross-section. The cross-section increases as the flight time of the pellets increases. Referring to FIG. 4A, clay target 401 moves along path 402. Shot string 403 intercepts target 401. Path 402 is an ideal path, in that no variables are considered that may alter path 402 of clay target 401 once clay target 401 is launched.

[0009] Referring to FIG. 4B, path range 404 depicts a range of potential flight paths for a clay target after being released on a shooting range. The flight path of the clay target is affected by several variables. Variables include mass, wind, drag, lift force, altitude, humidity, and temperature, resulting in a range of probable flight paths, path range 404. Path range 404 has upper limit 405 and lower limit 406. Path range 404 from launch angle θ is extrapolated using:

$$x = x_0 + v_{x0}\,t + \tfrac{1}{2} a_x t^2 + C_x$$

$$y = y_0 + v_{y0}\,t + \tfrac{1}{2} a_y t^2 + C_y$$

where $x$ is the clay position along the x-axis, $x_0$ is the initial position of the clay target along the x-axis, $v_{x0}$ is the initial velocity along the x-axis, $a_x$ is the acceleration along the x-axis, $t$ is time, and $C_x$ is the drag and lift variable along the x-axis; $y$ is the clay position along the y-axis, $y_0$ is the initial position of the clay target along the y-axis, $v_{y0}$ is the initial velocity along the y-axis, $a_y$ is the acceleration along the y-axis, $t$ is time, and $C_y$ is the drag and lift variable along the y-axis. Upper limit 405 is a maximum distance along the x-axis with $C_x$ at a maximum and a maximum along the y-axis with $C_y$ at a maximum. Lower limit 406 is a minimum distance along the x-axis with $C_x$ at a minimum and a minimum along the y-axis with $C_y$ at a minimum. Drag and lift are given by:

$$F_{drag} = \tfrac{1}{2}\,\rho\,v^2\,C_D\,A$$

where $F_{drag}$ is the drag force, $\rho$ is the density of the air, $v$ is $v_0$, $A$ is the cross-sectional area, and $C_D$ is the drag coefficient;

$$F_{lift} = \tfrac{1}{2}\,\rho\,v^2\,C_L\,A$$

where $F_{lift}$ is the lift force, $\rho$ is the density of the air, $v$ is $v_0$, $A$ is the planform area, and $C_L$ is the lift coefficient.
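The path-range idea in this paragraph can be sketched numerically. The code below assumes the position equations have the constant-acceleration form $p_0 + v_0 t + \tfrac{1}{2} a t^2 + C$ per axis and that drag and lift follow the standard $\tfrac{1}{2}\rho v^2 C A$ form. The class `Axis`, the functions `position`, `aero_force`, and `path_limits`, and all sample values are hypothetical names and numbers introduced for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Axis:
    p0: float       # initial position along the axis
    v0: float       # initial velocity along the axis
    a: float        # acceleration along the axis
    c: float = 0.0  # drag-and-lift variable C for the axis


def position(axis: Axis, t: float) -> float:
    """Position at time t: p0 + v0*t + (1/2)*a*t^2 + C."""
    return axis.p0 + axis.v0 * t + 0.5 * axis.a * t ** 2 + axis.c


def aero_force(rho: float, v: float, coeff: float, area: float) -> float:
    """Drag or lift force: (1/2) * rho * v^2 * coefficient * area."""
    return 0.5 * rho * v ** 2 * coeff * area


def path_limits(x: Axis, y: Axis, c_x: tuple, c_y: tuple, t: float):
    """Return (lower, upper) points at time t for (min, max) values of C."""
    lower = (position(replace(x, c=c_x[0]), t), position(replace(y, c=c_y[0]), t))
    upper = (position(replace(x, c=c_x[1]), t), position(replace(y, c=c_y[1]), t))
    return lower, upper


# Illustrative values only: units of feet and seconds.
x_axis = Axis(p0=0.0, v0=10.0, a=0.0)
y_axis = Axis(p0=1.0, v0=5.0, a=-32.0)
lower, upper = path_limits(x_axis, y_axis, (-1.0, 1.0), (-0.5, 0.5), 2.0)
```

Evaluating `path_limits` over a series of times traces the lower and upper boundaries of the range of probable flight paths.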

[0010] Referring to FIG. 5, an example of lead from the perspective of the marksman is described. Marksman 501 aims weapon 502 at clay target 503 moving along path 504 left to right. In order to hit target 503, marksman 501 must anticipate the time delay for a projectile fired from weapon 502 to intercept clay target 503 by aiming weapon 502 ahead of clay target 503 at aim point 505. Aim point 505 is lead distance 506 ahead of clay target 503 along path 504. Marksman 501 must anticipate and adjust aim point 505 according to a best guess at the anticipated path of the target.

[0011] Clay target 503 has initial trajectory angles $\gamma$ and $\beta$, positional coordinates $x_1$, $y_1$ and a velocity $v_1$. Aim point 505 has coordinates $x_2$, $y_2$. Lead distance 506 has x-component 507 and y-component 508. X-component 507 and y-component 508 are calculated by:

$$\Delta x = x_2 - x_1$$

$$\Delta y = y_2 - y_1$$

where $\Delta x$ is x-component 507 and $\Delta y$ is y-component 508. As $\gamma$ increases, $\Delta y$ must increase. As $\gamma$ increases, $\Delta x$ must increase. As $\beta$ increases, $\Delta y$ must increase.
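The lead components can be illustrated with a short sketch. It assumes that each component is the coordinate difference between the aim point and the target, which is how lead is defined earlier in this description. The function names and sample coordinates are hypothetical.

```python
import math


def lead_components(target: tuple, aim_point: tuple) -> tuple:
    """Return (dx, dy): aim point coordinates minus target coordinates."""
    dx = aim_point[0] - target[0]
    dy = aim_point[1] - target[1]
    return dx, dy


def lead_distance(target: tuple, aim_point: tuple) -> float:
    """Straight-line lead distance from the two components."""
    dx, dy = lead_components(target, aim_point)
    return math.hypot(dx, dy)


# Illustrative coordinates only.
dx, dy = lead_components((10.0, 5.0), (13.0, 9.0))
```

With the sample coordinates the components are 3 and 4, so the lead distance is 5 in the same units.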

[0012] The prior art has attempted to address the problems of teaching proper lead distance with limited success. For example, U.S. Pat. No. 3,748,751 to Breglia et al. discloses a laser, automatic fire weapon simulator. The simulator includes a display screen, a projector for projecting a picture on the display screen. A housing attaches to the barrel of the weapon. A camera with a narrow band pass filter positioned to view the display screen detects and records the laser light and the target shown on the display screen. However, the simulator requires the marksman to aim at an invisible object, thereby making the learning process of leading a target difficult and time-consuming.

[0013] U.S. Pat. No. 3,940,204 to Yokoi discloses a clay shooting simulation system. The system includes a screen, a first projector providing a visible mark on the screen, a second projector providing an infrared mark on the screen, a mirror adapted to reflect the visible mark and the infrared mark to the screen, and a mechanical apparatus for moving the mirror in three dimensions to move the two marks on the screen such that the infrared mark leads the visible mark to simulate a lead-sighting point in actual clay shooting. A light receiver receives the reflected infrared light. However, the system in Yokoi requires a complex mechanical device to project and move the target on the screen, which leads to frequent failure and increased maintenance.

[0014] U.S. Pat. No. 3,945,133 to Mohon et al. discloses a weapons training simulator utilizing polarized light. The simulator includes a screen and a projector projecting a two-layer film. The two-layer film is formed of a normal film and a polarized film. The normal film shows a background scene with a target with non-polarized light. The polarized film shows a leading target with polarized light. The polarized film is layered on top of the normal non-polarized film. A polarized light sensor is mounted on the barrel of a gun. However, the weapons training simulator requires two cameras and two types of film to produce the two-layered film, making the simulator expensive and time-consuming to build and operate.

[0015] U.S. Pat. No. 5,194,006 to Zaenglein, Jr. discloses a shooting simulator. The simulator includes a screen, a projector for displaying a moving target image on the screen, and a weapon connected to the projector. When a marksman pulls the trigger, a beam of infrared light is emitted from the weapon. A delay is introduced between the time the trigger is pulled and the beam is emitted. An infrared light sensor detects the beam of infrared light. However, the training device in Zaenglein, Jr. requires the marksman to aim at an invisible object, thereby making the learning process of leading a target difficult and time-consuming.

[0016] U.S. Patent Publication No. 2010/0201620 to Sargent discloses a firearm training system for moving targets. The system includes a firearm, two cameras mounted on the firearm, a processor, and a display. The two cameras capture a set of stereo images of the moving target along the moving target's path when the trigger is pulled. However, the system requires the marksman to aim at an invisible object, thereby making the learning process of leading a target difficult and time-consuming. Further, the system requires two cameras mounted on the firearm, making the firearm heavy and difficult to manipulate, leading to inaccurate aiming and firing by the marksman when firing live ammunition without the mounted cameras.

[0017] The prior art fails to disclose or suggest a system and method for simulating a lead for a moving target using generated images of targets projected at the same scale as viewed in the field and a phantom target positioned ahead of the targets having a variable contrast. The prior art further fails to disclose or suggest a system and method for simulating lead in a virtual reality system. Therefore, there is a need in the art for a shooting simulator that recreates moving targets at the same visual scale as seen in the field with a phantom target to teach proper lead of a moving target in a virtual reality platform.

SUMMARY

[0018] A system and method for simulating lead of a target includes a network, a simulation administrator connected to the network, a database connected to the simulation administrator, and a user device connected to the network. The user device includes a virtual reality unit, and a computer connected to the virtual reality unit and to the network. A set of position trackers are connected to the computer.

[0019] In a preferred embodiment, a target is simulated. In one embodiment, a simulated weapon is provided. In another embodiment, a set of sensors is attached to a real weapon. In another embodiment, a set of gloves having a set of sensors is worn by a user. The system generates a simulated target and displays the simulated target upon launch of the generated target. The computer tracks the position of the generated target and the position of the virtual reality unit and the weapon to generate a phantom target and a phantom halo. The generated phantom target and the generated phantom halo are displayed on the virtual reality unit at a lead distance and a drop distance from the live target as viewed through the virtual reality unit. The computer determines a hit or a miss of the generated target using the weapon, the phantom target, and the phantom halo. In one embodiment, the disclosed system and method is implemented in a two-dimensional video game.
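One way to picture the phantom target and the hit determination is the two-dimensional sketch below. It rests on assumptions that the summary does not state: the lead is applied along the target's direction of travel, the drop offset is applied straight up, the halo is a circle around the phantom, and a hit is scored when the aim point falls inside the halo. The names `Point`, `phantom_position`, and `is_hit` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float


def phantom_position(target: Point, heading_rad: float,
                     lead: float, drop: float) -> Point:
    """Place the phantom ahead of the target by `lead` and above it by `drop`.

    Assumption: heading_rad is the target's direction of travel in the view plane.
    """
    return Point(target.x + lead * math.cos(heading_rad),
                 target.y + lead * math.sin(heading_rad) + drop)


def is_hit(aim: Point, phantom: Point, halo_radius: float) -> bool:
    """Assumed hit rule: the aim point lies within the halo around the phantom."""
    return math.hypot(aim.x - phantom.x, aim.y - phantom.y) <= halo_radius


# Illustrative values only: target moving left to right.
phantom = phantom_position(Point(0.0, 10.0), 0.0, lead=3.0, drop=0.5)
```

A simulator following this sketch would recompute the phantom every frame from the tracked target position and compare it against the weapon's aim point when the trigger is pulled.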

[0020] The present disclosure provides a system which embodies significantly more than an abstract idea including technical advancements in the field of data processing and a transformation of data which is directly related to real world objects and situations. The disclosed embodiments create and transform imagery in hardware, for example, a weapon peripheral and a sensor attachment to a real weapon.

BRIEF DESCRIPTION OF THE DRAWINGS

[0021] The disclosed embodiments will be described with reference to the accompanying drawings.

[0022] FIG. 1 is a plan view of a skeet shooting range.

[0023] FIG. 2 is a plan view of a trap shooting range.

[0024] FIG. 3A is a target path and an associated projectile path.

[0025] FIG. 3B is a target path and an associated projectile path.

[0026] FIG. 3C is a target path and an associated projectile path.

[0027] FIG. 3D is a target path and an associated projectile path.

[0028] FIG. 4A is an ideal path of a moving target.

[0029] FIG. 4B is a range of probable flight paths of a target.

[0030] FIG. 5 is a perspective view of a marksman aiming at a moving target.

[0031] FIG. 6 is a schematic of a simulator system of a preferred embodiment.

[0032] FIG. 7 is a schematic of a simulation administrator of a preferred embodiment.

[0033] FIG. 8 is a schematic of a user device of a simulator system of a preferred embodiment.

[0034] FIG. 9A is a side view of a user device of a virtual reality simulator system of a preferred embodiment.

[0035] FIG. 9B is a front view of a user device of a virtual reality simulator system of a preferred embodiment.

[0036] FIG. 10A is a side view of a simulated weapon for a virtual reality system of a preferred embodiment.

[0037] FIG. 10B is a side view of a real weapon with a set of sensors attachment for a virtual reality system of a preferred embodiment.

[0038] FIG. 10C is a detail view of a trigger sensor of a preferred embodiment.

[0039] FIG. 10D is a detail view of a set of muzzle sensors of a preferred embodiment.

[0040] FIG. 11 is a top view of a glove controller for a virtual reality system of a preferred embodiment.

[0041] FIG. 12 is a schematic view of a virtual reality simulation environment of a preferred embodiment.

[0042] FIG. 13 is a command input menu for a virtual reality simulator system of a preferred embodiment.

[0043] FIG. 14 is a flow chart of a method for runtime process of a virtual reality simulation system of a preferred embodiment.

[0044] FIG. 15A is a top view of a user and a simulation environment of a preferred embodiment.

[0045] FIG. 15B is a flow chart of a method for determining a view for a user device with respect to a position and an orientation of the user device and the weapon.

[0046] FIG. 15C is a flow chart of a method for mapping the position and orientation of the user device and the weapon to the simulation environment for determining a display field of view of a preferred embodiment.

[0047] FIG. 16A is a flow chart of a method for determining a phantom and halo of a preferred embodiment.

[0048] FIG. 16B is a plan view of a target and a phantom of a preferred embodiment.

[0049] FIG. 16C is an isometric view of a target and a phantom of a preferred embodiment.

[0050] FIG. 17 is a user point of view of a virtual reality simulation system of a preferred embodiment.

DETAILED DESCRIPTION

[0051] It will be appreciated by those skilled in the art that aspects of the present disclosure may be illustrated and described herein in any of a number of patentable classes or context including any new and useful process, machine, manufacture, or composition of matter, or any new and useful improvement thereof. Therefore, aspects of the present disclosure may be implemented entirely in hardware, entirely in software (including firmware, resident software, micro-code, etc.) or combining software and hardware implementation that may all generally be referred to herein as a "circuit," "module," "component," or "system." Further, aspects of the present disclosure may take the form of a computer program product embodied in one or more computer readable media having computer readable program code embodied thereon.

[0052] Any combination of one or more computer readable media may be utilized. The computer readable media may be a computer readable signal medium or a computer readable storage medium. For example, a computer readable storage medium may be, but not limited to, an electronic, magnetic, optical, electromagnetic, or semiconductor system, apparatus, or device, or any suitable combination of the foregoing. More specific examples of the computer readable storage medium would include, but are not limited to: a portable computer diskette, a hard disk, a random access memory ("RAM"), a read-only memory ("ROM"), an erasable programmable read-only memory ("EPROM" or Flash memory), an appropriate optical fiber with a repeater, a portable compact disc read-only memory ("CD-ROM"), an optical storage device, a magnetic storage device, or any suitable combination of the foregoing. Thus, a computer readable storage medium may be any tangible medium that can contain, or store a program for use by or in connection with an instruction execution system, apparatus, or device.

[0053] A computer readable signal medium may include a propagated data signal with computer readable program code embodied therein, for example, in baseband or as part of a carrier wave. The propagated data signal may take any of a variety of forms including, but not limited to, electro-magnetic, optical, or any suitable combination thereof. A computer readable signal medium may be any computer readable medium that is not a computer readable storage medium and that can communicate, propagate, or transport a program for use by or in connection with an instruction execution system, apparatus, or device. Program code embodied on a computer readable signal medium may be transmitted using any appropriate medium, including but not limited to wireless, wireline, optical fiber cable, RF, or any suitable combination thereof.

[0054] Computer program code for carrying out operations for aspects of the present disclosure may be written in any combination of one or more programming languages, including an object oriented programming language such as Java, Scala, Smalltalk, Eiffel, JADE, Emerald, C++, C#, VB.NET, Python or the like, conventional procedural programming languages, such as the "C" programming language, Visual Basic, Fortran 2003, Perl, COBOL 2002, PHP, ABAP, dynamic programming languages such as Python, Ruby and Groovy, or other programming languages.

[0055] Aspects of the present disclosure are described with reference to flowchart illustrations and/or block diagrams of methods, systems and computer program products according to embodiments of the disclosure. It will be understood that each block of the flowchart illustrations and/or block diagrams, and combinations of blocks in the flowchart illustrations and/or block diagrams, can be implemented by computer program instructions. These computer program instructions may be provided to a processor of a general purpose computer, special purpose computer, or other programmable data processing apparatus to produce a machine, such that the instructions, which execute via the processor of the computer or other programmable instruction execution apparatus, create a mechanism for implementing the functions/acts specified in the flowchart and/or block diagram block or blocks.

[0056] These computer program instructions may also be stored in a computer readable medium that when executed can direct a computer, other programmable data processing apparatus, or other devices to function in a particular manner, such that the instructions when stored in the computer readable medium produce an article of manufacture including instructions which when executed, cause a computer to implement the function/act specified in the flowchart and/or block diagram block or blocks. The computer program instructions may also be loaded onto a computer, other programmable instruction execution apparatus, or other devices to cause a series of operational steps to be performed on the computer, other programmable apparatuses or other devices to produce a computer implemented process such that the instructions which execute on the computer or other programmable apparatus provide processes for implementing the functions/acts specified in the flowchart and/or block diagram block or blocks.

[0057] Referring to FIG. 6, system 600 includes network 601, simulation administrator 602 connected to network 601, and user device 604 connected to network 601. Simulation administrator 602 is further connected to simulation database 603 for storage of relevant data. For example, data includes a set of target data, a set of weapon data, and a set of environment data.
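The relationship between the simulation administrator and the simulation database can be sketched as a small in-memory model. Everything below is a hypothetical illustration: the class names, the dictionary-based storage, the `load_scenario` method, and the sample records are assumptions, and the network layer between the user device and the administrator is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class SimulationDatabase:
    """Holds the three data sets named in the description."""
    targets: dict = field(default_factory=dict)
    weapons: dict = field(default_factory=dict)
    environments: dict = field(default_factory=dict)


class SimulationAdministrator:
    """Serves simulation data to a user device (network layer omitted)."""

    def __init__(self, database: SimulationDatabase) -> None:
        self.database = database

    def load_scenario(self, target: str, weapon: str, environment: str) -> dict:
        # A missing key raises KeyError, so a bad request fails loudly.
        return {
            "target": self.database.targets[target],
            "weapon": self.database.weapons[weapon],
            "environment": self.database.environments[environment],
        }


# Illustrative records only; the field names are made up for this sketch.
db = SimulationDatabase(
    targets={"clay": {"diameter_in": 4.25}},
    weapons={"12 gauge": {"type": "shotgun"}},
    environments={"skeet": {"stations": 8}},
)
admin = SimulationAdministrator(db)
scenario = admin.load_scenario("clay", "12 gauge", "skeet")
```

In a networked deployment the user device would send the three selections over the network and receive the assembled scenario in reply.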

[0058] In one embodiment, network 601 is a local area network. In another embodiment, network 601 is a wide area network, such as the internet. In other embodiments, network 601 includes a combination of wide area networks and local area networks, and includes cellular networks.

[0059] In a preferred embodiment, user device 604 communicates with simulation administrator 602 to access database 603 to generate and project a simulation that includes a target, a phantom, and a phantom halo adjacent to the target as will be further described below.

[0060] In another embodiment, simulation administrator 602 generates a simulation that includes a target, a phantom, a phantom halo adjacent to the target, and a weapon image, as will be further described below, and sends the simulation to the user device for projection.

[0061] Referring to FIG. 7, simulation administrator 701 includes processor 702, network interface 703 connected to processor 702, and memory 704 connected to processor 702. Simulation application 705 is stored in memory 704 and executed by processor 702. Simulation application 705 includes position application 706, statistics engine 707, and target and phantom generator 708.

[0062] In a preferred embodiment, simulation administrator 701 is a PowerEdge C6100 server and includes a PowerEdge C410x PCIe Expansion Chassis available from Dell Inc. Other suitable servers, server arrangements, and computing devices known in the art may be employed.

[0063] In one embodiment, position application 706 communicates with a position tracker connected to the user device to detect the position of the user device for simulation application 705. Statistics engine 707 communicates with a database to retrieve relevant data and generate renderings according to desired simulation criteria, such as desired weapons, environments, and target types for simulation application 705. Target and phantom generator 708 calculates and generates a target along a target path, a phantom target, and a phantom halo for the desired target along a phantom path for simulation application 705, as will be further described below.

[0064] Referring to FIG. 8, user device 800 includes computer 801 connected to headset 802. Computer 801 is further connected to replaceable battery 803, microphone 804, speaker 805, and position tracker 806.

[0065] Computer 801 includes processor 807, memory 809 connected to processor 807, and network interface 808 connected to processor 807. Simulation application 810 is stored in memory 809 and executed by processor 807. Simulation application 810 includes position application 811, statistics engine 812, and target and phantom generator 813. In a preferred embodiment, position application 811 communicates with position tracker 806 to detect the position of headset 802 for simulation application 810. Statistics engine 812 communicates with a database to retrieve relevant data and generate renderings according to desired simulation criteria, such as

desired weapons, environments, and target types for simulation application 810. Target and phantom generator 813 calculates and generates a target along a target path, a phantom target, and a phantom halo for the desired target along a phantom path for simulation application 810, as will be further described below.

[0066] Input device 814 is connected to computer 801. Input device 814 includes processor 815, memory 816 connected to processor 815, communication interface 817 connected to processor 815, a set of sensors 818 connected to processor 815, and a set of controls 819 connected to processor 815.

[0067] In one embodiment, input device 814 is a simulated weapon, such as a shotgun, a rifle, or a handgun. In another embodiment, input device 814 is a set of sensors connected to a disabled real weapon, such as a shotgun, a rifle, or a handgun, to detect movement and actions of the real weapon. In another embodiment, input device 814 is a glove having a set of sensors worn by a user to detect positions and movements of a hand of the user.

[0068] Headset 802 includes processor 820, battery 821 connected to processor 820, memory 822 connected to processor 820, communication interface 823 connected to processor 820, display unit 824 connected to processor 820, and a set of sensors 825 connected to processor 820.

[0069] Referring to FIGS. 9A and 9B, a preferred implementation of user device 800 is described as user device 900. User 901 wears virtual reality unit 902 having straps 903 and 904. Virtual reality unit 902 is connected to computer 906 via connection 905. Computer 906 is preferably a portable computing device, such as a laptop or tablet computer, worn by user 901. In other embodiments, computer 906 is a desktop computer or a server, not worn by the user. Any suitable computing device known in the art may be employed. Connection 905 provides a data and power connection from computer 906 to virtual reality unit 902.

[0070] Virtual reality unit 902 includes skirt 907 attached to straps 903 and 904 and display portion 908 attached to skirt 907. Skirt 907 covers eyes 908 and 916 of user 901. Display portion 908 includes processor 911, display unit 910 connected to processor 911, a set of sensors 912 connected to processor 911, communication interface 913 connected to processor 911, and memory 914 connected to processor 911. Lens 909 is positioned adjacent to display unit 910 and eye 908 of user 901. Lens 913 is positioned adjacent to display unit 910 and eye 916 of user 901. Virtual reality unit 902 provides a stereoscopic three-dimensional view of images to user 901.

[0071] User 901 wears communication device 917. Communication device 917 includes earpiece speaker 918 and microphone 919. Communication device 917 is preferably connected to computer 906 via a wireless connection such as a Bluetooth connection. In other embodiments, other wireless or wired connections are employed. Communication device 917 enables voice activation and voice control of a simulation application stored in computer 906 by user 901.

[0072] In one embodiment, virtual reality unit 902 is the Oculus Rift headset available from Oculus VR, LLC. In another embodiment, virtual reality unit 902 is the HTC Vive headset available from HTC Corporation. In this embodiment, a set of laser position sensors 920 is attached to an external surface of virtual reality unit 902 to provide position data of virtual reality unit 902. Any suitable virtual reality unit known in the art may be employed.

[0073] In a preferred embodiment, a simulation environment that includes a target is generated by computer 906. Computer 906 further generates a phantom target and a phantom halo in front of the generated target based on a generated target flight path. The simulation environment, including the generated target, the phantom target, and the phantom halo, is transmitted from computer 906 to virtual reality unit 902 for viewing adjacent eyes 908 and 916 of user 901, as will be further described below. The user aims a weapon at the phantom target to attempt to shoot the generated target.

[0074] Referring to FIG. 10A, in one embodiment, simulated weapon 1001 includes trigger 1002 connected to set of sensors 1003, which is connected to processor 1004. Communication interface 1005 is connected to processor 1004 and to computer 1009. Simulated weapon 1001 further includes set of controls 1006 attached to an external surface of weapon 1001 and connected to processor 1004. Set of controls 1006 includes directional pad 1007 and selection button 1008. Battery 1010 is connected to processor 1004. Actuator 1024 is connected to processor 1004 to provide haptic feedback.

[0075] In a preferred embodiment, simulated weapon 1001 is a shotgun. It will be appreciated by those skilled in the art that any type of weapon may be employed.

[0076] In one embodiment, simulated weapon 1001 is a Delta Six first person shooter controller available from Avenger Advantage, LLC. Other suitable simulated weapons known in the art may be employed.

[0077] In a preferred embodiment, set of sensors 1003 includes a position sensor for trigger 1002 and a set of motion sensors to detect an orientation of weapon 1001.

[0078] In a preferred embodiment, the position sensor is a Hall Effect sensor. In this embodiment, a magnet is attached to trigger 1002. Any type of Hall Effect sensor or any other suitable sensor type known in the art may be employed.
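By way of illustration only, trigger pull detection from a Hall Effect sensor reading may be sketched as follows. The specification does not describe the detection logic; the normalized reading scale, the two thresholds, and the names `TriggerDetector` and `count_pulls` are assumptions introduced for this sketch. It further assumes that the reading rises as the magnet on the trigger approaches the sensor, and it uses hysteresis so that a single pull is not counted twice.

```python
from dataclasses import dataclass


@dataclass
class TriggerDetector:
    """Detects trigger pulls from Hall Effect readings using hysteresis."""

    pull_threshold: float = 0.7     # hypothetical normalized field strength
    release_threshold: float = 0.3  # hypothetical; must be below pull_threshold
    pulled: bool = False

    def update(self, reading: float) -> bool:
        """Return True only on the sample where a new pull is detected."""
        if not self.pulled and reading >= self.pull_threshold:
            self.pulled = True
            return True
        if self.pulled and reading <= self.release_threshold:
            self.pulled = False  # trigger released; ready for the next pull
        return False


def count_pulls(readings):
    """Count distinct trigger pulls in a sequence of sensor readings."""
    detector = TriggerDetector()
    return sum(detector.update(r) for r in readings)
```

The gap between the two thresholds keeps sensor noise near a single threshold from registering as repeated pulls.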

[0079] In a preferred embodiment, the set of motion sensors is a 9-axis motion tracking system-in-package sensor, model no. MPU-9150 available from InvenSense®, Inc. In this embodiment, the 9-axis sensor combines a 3-axis gyroscope, a 3-axis accelerometer, an on-board digital motion processor, and a 3-axis digital compass. In other embodiments, other suitable sensors and/or suitable combinations of sensors may be employed.

[0080] Referring to FIGS. 10B, 10C, and 10D, in another embodiment, weapon 1010 includes simulation attachment 1011 removably attached to its stock. Simulation attachment 1011 includes on-off switch 1012 and pair button 1013 to communicate with computer 1009 via a Bluetooth connection. Any suitable wireless connection may be employed. Trigger sensor 1014 is removably attached to trigger 1022 and in communication with simulation attachment 1011. A set of muzzle sensors 1015 is attached to a removable plug 1016, which is removably inserted into barrel 1023 of weapon 1010. Set of muzzle sensors 1015 includes processor 1017, battery 1018 connected to processor 1017, gyroscope 1019 connected to processor 1017, accelerometer 1020 connected to processor 1017, and compass 1021 connected to processor 1017.

[0081] In one embodiment, set of muzzle sensors 1015 and removable plug 1016 are positioned partially protruding outside of barrel 1023 of weapon 1010.

[0082] In one embodiment, weapon 1010 includes rail 1025 attached to its stock in any position. In this embodiment, set of muzzle sensors 1015 is mounted to rail 1025.

[0083] In one embodiment, weapon 1010 fires blanks to provide kickback to a user.

[0084] It will be appreciated by those skilled in the art that any weapon may be employed as weapon 1010, including any rifle or handgun. It will be further appreciated by those skilled in the art that rail 1025 is optionally mounted to any type of weapon. Set of muzzle sensors 1015 may be mounted in any position on weapon 1010. Any type of mounting means known in the art may be employed.

[0085] Referring to FIG. 11, tracking glove 1100 includes hand portion 1101 and wrist portion 1102. Wrist portion 1102 includes processor 1103, battery 1104 connected to processor 1103, communication interface 1105, and memory 1106 connected to processor 1103. Hand portion 1101 includes thumb portion 1109 and finger portions 1112, 1116, 1120, and 1124. Hand portion 1101 further includes backhand sensor 1107 and palm sensor 1108, each of which is connected to processor 1103. Thumb portion 1109 has sensors 1110 and 1111, each of which is connected to processor 1103. Finger portion 1112 has sensors 1113, 1114, and 1115, each of which is connected to processor 1103. Finger portion 1116 has sensors 1117, 1118, and 1119, each of which is connected to processor 1103. Finger portion 1120 has sensors 1121, 1122, and 1123, each of which is connected to processor 1103. Finger portion 1124 has sensors 1125, 1126, and 1127, each of which is connected to processor 1103.

[0086] In a preferred embodiment, hand portion 1101 is a polyester, nylon, silicone, and neoprene mixture fabric. In this embodiment, each of sensors 1110, 1111, 1113, 1114, 1115, 1117, 1118, 1119, 1121, 1122, 1123, 1125, 1126, and 1127 and each of backhand sensor 1107 and palm sensor 1108 is sewn into the hand portion. Other suitable fabrics known in the art may be employed.

[0087] In a preferred embodiment, wrist portion 1102 includes a hook and loop strap to secure tracking glove 1100. Other securing means known in the art may be employed.

[0088] In a preferred embodiment, each of backhand sensor 1107 and palm sensor 1108 is an iNEMO inertial module, model no. LSM9DS1 available from STMicroelectronics. Other suitable sensors known in the art may be employed.

[0089] In a preferred embodiment, each of sensors 1110, 1111, 1113, 1114, 1115, 1117, 1118, 1119, 1121, 1122, 1123, 1125, 1126, and 1127 is an iNEMO inertial module, model no. LSM9DS1 available from STMicroelectronics. Other suitable sensors known in the art may be employed.

[0090] Referring to FIG. 12, in simulation environment 1200, user 1201 wears user device 1202 connected to computer 1204 and holds weapon 1203. Each of position trackers 1205 and 1206 is connected to computer 1204. Position tracker 1205 has field of view 1207. Position tracker 1206 has field of view 1208. User 1201 is positioned in fields of view 1207 and 1208.

[0091] In one embodiment, weapon 1203 is a simulated weapon. In another embodiment, weapon 1203 is a real weapon with a simulation attachment. In another embodiment, weapon 1203 is a real weapon and user 1201 wears a set of tracking gloves 1210. In other embodiments, user 1201 wears the set of tracking gloves 1210 and uses the simulated weapon or the real weapon with the simulation attachment.

[0092] In a preferred embodiment, each of position trackers 1205 and 1206 is a near infrared CMOS sensor having a refresh rate of 60 Hz. Other suitable position trackers known in the art may be employed.

[0093] In a preferred embodiment, position trackers 1205 and 1206 capture the vertical and horizontal positions of user device 1202, weapon 1203, and/or set of gloves 1210. For

example, position tracker 1205 captures the positions and movement of user device 1202, weapon 1203, and/or set of gloves 1210 in the y-z plane of coordinate system 1209, and position tracker 1206 captures the positions and movement of user device 1202, weapon 1203, and/or set of gloves 1210 in the x-z plane of coordinate system 1209. Further, a horizontal angle and an inclination angle of the weapon are tracked by analyzing image data from position trackers 1205 and 1206. Since the horizontal angle and the inclination angle are sufficient to describe the aim point of the weapon, the aim point of the weapon is tracked in time.
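As a minimal sketch of why two angles suffice, the aim direction may be expressed as a unit vector computed from a horizontal angle and an inclination angle. The angle conventions below (horizontal angle measured in the x-y plane from the +x axis, inclination measured upward toward +z) and the function names `aim_direction` and `aim_point` are assumptions made for illustration; the specification does not define them.

```python
import math


def aim_direction(horizontal_deg: float, inclination_deg: float):
    """Unit vector along the barrel for the given angles.

    Assumed convention: the horizontal angle is measured in the x-y plane
    from the +x axis, and the inclination angle is measured upward from
    the x-y plane toward +z (z taken as vertical).
    """
    h = math.radians(horizontal_deg)
    i = math.radians(inclination_deg)
    return (math.cos(i) * math.cos(h), math.cos(i) * math.sin(h), math.sin(i))


def aim_point(muzzle, horizontal_deg: float, inclination_deg: float, distance: float):
    """Point reached by travelling `distance` from the muzzle along the aim direction."""
    dx, dy, dz = aim_direction(horizontal_deg, inclination_deg)
    return (muzzle[0] + distance * dx,
            muzzle[1] + distance * dy,
            muzzle[2] + distance * dz)
```

Sampling the two angles on every tracker frame and evaluating `aim_point` at the target distance gives an aim point as a function of time.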

[0094] In a preferred embodiment, computer 1204 generates the set of target data, which includes a target launch position, a target launch angle, and a target launch velocity of the generated target. Computer 1204 retrieves a set of weapon data based on a desired weapon, including a weapon type, e.g., a shotgun, a rifle, or a handgun, a set of weapon dimensions, a weapon caliber or gauge, a shot type including a load, a caliber, a pellet size, and shot mass, a barrel length, a choke type, and a muzzle velocity. Other weapon data may be employed. Computer 1204 further retrieves a set of environmental data that includes temperature, amount of daylight, amount of clouds, altitude, wind velocity, wind direction, precipitation type, precipitation amount, humidity, and barometric pressure for desired environmental conditions. Other types of environmental data may be employed.
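One possible way to organize the three data sets listed above is sketched below. The field names mirror the items named in the paragraph; the class names, the Python types, and the absence of units are assumptions made for illustration and are not taken from the specification.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TargetData:
    launch_position: Tuple[float, float, float]
    launch_angle: float
    launch_velocity: float


@dataclass
class ShotType:
    load: str
    caliber: str
    pellet_size: str
    shot_mass: float


@dataclass
class WeaponData:
    weapon_type: str                      # e.g. "shotgun", "rifle", "handgun"
    dimensions: Tuple[float, float, float]
    caliber_or_gauge: str
    shot_type: ShotType
    barrel_length: float
    choke_type: str
    muzzle_velocity: float


@dataclass
class EnvironmentData:
    temperature: float
    daylight: float
    clouds: float
    altitude: float
    wind_velocity: float
    wind_direction: float
    precipitation_type: str
    precipitation_amount: float
    humidity: float
    barometric_pressure: float
```

Grouping the shot type as its own record keeps the load, caliber, pellet size, and shot mass together, as they are listed together in the paragraph.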

[0095] Position trackers 1205 and 1206 capture a set of position image data of user device 1202, weapon 1203, and/or set of gloves 1210, and the set of images is sent to computer 1204. Sensors in user device 1202, weapon 1203, and/or set of gloves 1210 detect a set of orientation data and send the set of orientation data to computer 1204. Computer 1204 then calculates a generated target flight path for the generated target based on the set of target data, the set of environment data, and the position and orientation of user device 1202. The position and orientation of user device 1202, weapon 1203, and/or set of gloves 1210 are determined from the set of position image data and the set of orientation data. Computer 1204 generates a phantom target and a phantom halo based on the generated target flight path and transmits the phantom target and the phantom halo to user device 1202 for viewing by user 1201. User 1201 aims weapon 1203 at the phantom target and the phantom halo to attempt to hit the generated target. Computer 1204 detects a trigger pull on weapon 1203 by a trigger sensor and/or a finger sensor and determines a hit or a miss of the generated target based on the timing of the trigger pull, the set of weapon data, the position and orientation of user device 1202, weapon 1203, and/or set of gloves 1210, the phantom target, and the phantom halo.

[0096] Referring to FIG. 13, command menu 1300 includes simulation type 1301, weapon type 1302, ammunition 1303, target type 1304, station select 1305, phantom toggle 1306, day/night mode 1307, environmental conditions 1308, freeze frame 1309, instant replay 1310, and start/end simulation 1311. Simulation type 1301 enables a user to select different types of simulations. For example, the simulation type includes skeet shooting, trap shooting, sporting clays, and hunting. Weapon type 1302 enables the user to choose from different weapon types and sizes, such as a shotgun, a rifle, and a handgun, and different calibers or gauges of the weapon type. The user further enters a weapon sensor location, for example, in the muzzle or on a rail, and whether the user is right or left handed. Ammunition 1303 enables the user to select different types of ammunition for the selected weapon

type. Target type 1304 enables the user to select different types of targets for the simulation, including a target size, a target color, and a target shape. Station select 1305 enables the user to choose different stations to shoot from, for example, in a trap shooting range, a skeet shooting range, or a sporting clays course. The user further selects a number of shot sequences for the station select. In a preferred embodiment, the number of shot sequences in the set of shot sequences is determined by the type of shooting range used and the number of target flight path variations to be generated. For example, the representative number of shot sequences for a skeet shooting range is at least eight, one shot sequence per station. More than one shot per station may be utilized.

[0097] Phantom toggle 1306 allows a user to select whether to display a phantom target and a phantom halo during the simulation. The user further selects a phantom color, a phantom brightness level, and a phantom transparency level. Day/night mode 1307 enables the user to switch the environment between daytime and nighttime. Environmental conditions 1308 enables the user to select different simulation environmental conditions, including temperature, amount of daylight, amount of clouds, altitude, wind velocity, wind direction, precipitation type, precipitation amount, humidity, and barometric pressure. Other types of environmental data may be employed. Freeze frame 1309 allows the user to “pause” the simulation. Instant replay 1310 enables the user to replay the last shot sequence, including the shot attempt by the user. Start/end simulation 1311 enables the user to start or end the simulation. In one embodiment, selection of 1301, 1302, 1303, 1304, 1305, 1306, 1307, 1308, 1309, 1310, and 1311 is accomplished via voice controls. In another embodiment, selection of 1301, 1302, 1303, 1304, 1305, 1306, 1307, 1308, 1309, 1310, and 1311 is accomplished via a set of controls on a simulated weapon as previously described.

[0098] Referring to FIG. 14, runtime method 1400 for a target simulation will be described. At step 1401, a baseline position and orientation of the user device and a baseline position and orientation of the weapon are set. In this step, the computer retrieves a set of position image data from a set of position trackers and a set of orientation data from a set of sensors in the user device, the weapon, and/or a set of gloves, and saves the current position and orientation of the user device and the weapon into memory. Based on the simulation choice, the virtual position of the launcher relative to the position and orientation of the user device is also set. If the user device is oriented toward the virtual location of the launcher, a virtual image of the launcher will be displayed. At step 1402, a set of target flight data, a set of environment data, and a set of weapon data are determined from a set of environment sensors and a database.

[0099] In a preferred embodiment, the set of weapon data is downloaded and saved into the database based on the type of weapon that is in use. In a preferred embodiment, the set of weapon data includes a weapon type, e.g., a shotgun, a rifle, or a handgun, a weapon caliber or gauge, a shot type including a load, a caliber, a pellet size, and shot mass, a barrel length, a choke type, and a muzzle velocity. Other weapon data may be employed.

[0100] In a preferred embodiment, the set of environment data is retrieved from the database and includes a wind velocity, an air temperature, an altitude, a relative air humidity, and an outdoor illuminance. Other types of environmental data may be employed.

[0101] In a preferred embodiment, the set of target flight data is retrieved from the database based on the type of target in use. In a preferred embodiment, the set of target flight data includes a launch angle of the target, an initial velocity of the target, a mass of the target, a target flight time, a drag force, a lift force, a shape of the target, a color of the target, and a target brightness level.

[0102] At step 1403, the target and environment are generated from the set of target flight data and the set of environmental data. At step 1404, a virtual weapon image is generated and saved in memory. In this step, images and the set of weapon data of the selected weapon for the simulation are retrieved from the database. At step 1405, the target is launched and the target and environment are displayed in the user device. In a preferred embodiment, a marksman will initiate the launch with a voice command such as “pull”.

[0103] At step 1406, a view of the user device with respect to a virtual target launched is determined, as will be further described below.

[0104] At step 1407, a phantom target and a phantom halo are generated based on a target path and the position and orientation of the user, as will be further described below. The target path is determined from the target position and the target velocity using Eqs. 1-4. At step 1408, the generated phantom target and the generated phantom halo are sent to the user device and displayed, if the user device is oriented toward the target path. The generated weapon is displayed if the user device is oriented toward the position of the virtual weapon. At step 1409, whether the trigger on the weapon has been pulled is determined from a set of weapon sensors and/or a set of glove sensors. If not, then method 1400 returns to step 1408. If the trigger has been pulled, then method 1400 proceeds to step 1410.
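The branch at step 1409 may be sketched as a simple polling loop. This is an illustrative sketch only: the callables `display_phantom` and `determine_shot_string` and the iterable `trigger_samples` are hypothetical stand-ins for the display step, the shot string step, and the weapon and/or glove sensors, and the loop-back target follows the step number as printed in the paragraph above.

```python
from typing import Callable, Iterable


def run_until_trigger(trigger_samples: Iterable[bool],
                      display_phantom: Callable[[], None],
                      determine_shot_string: Callable[[], None]) -> int:
    """Repeat the display step until a trigger pull is seen, then proceed.

    Returns the number of times the display step ran. If no pull is ever
    observed, the shot string step is never invoked.
    """
    frames = 0
    for pulled in trigger_samples:
        display_phantom()            # step 1408: display phantom target and halo
        frames += 1
        if pulled:                   # step 1409: has the trigger been pulled?
            determine_shot_string()  # step 1410: determine the shot string
            break
    return frames
```

Passing the steps in as callables keeps the control flow separate from rendering and sensor code, which the specification describes elsewhere.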

[0105] At step 1410, a shot string is determined. In this step, a set of position trackers capture a set of weapon position images. In this step, a set of weapon position data is received from a set of weapon sensors. The shot string is calculated by

$$A_{\text{shot string}} = \pi R_{\text{string}}^{2}$$

$$R_{\text{string}} = R_{\text{initial}} + v_{\text{spread}}\, t$$

where $A_{\text{shot string}}$ is the area of the shot string, $R_{\text{string}}$ is the radius of the shot string, $R_{\text{initial}}$ is the radius of the shot as it leaves the weapon, $v_{\text{spread}}$ is the rate at which the shot spreads, and $t$ is the time it takes for the shot to travel from the weapon to the target. An aim point of the weapon is determined from the set of weapon position images and the set of weapon position data. A shot string position is determined from the position of the weapon at the time of firing and the area of the shot string.
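As a numerical sketch, the shot string may be modeled as a circular cross-section whose radius grows linearly with flight time, so that the radius is the initial radius plus the spread rate multiplied by the time of flight, and the area is pi times the radius squared. This reading follows the quantities defined in the paragraph above; the function names and the sample values in the usage note are assumptions chosen for illustration.

```python
import math


def shot_string_radius(r_initial: float, v_spread: float, t: float) -> float:
    """Radius of the shot string after time t, assuming linear spread."""
    return r_initial + v_spread * t


def shot_string_area(r_initial: float, v_spread: float, t: float) -> float:
    """Area of the circular shot string cross-section after time t."""
    radius = shot_string_radius(r_initial, v_spread, t)
    return math.pi * radius ** 2
```

For example, with a hypothetical initial radius of 0.01 m, a spread rate of 0.5 m/s, and a flight time of 0.1 s, the radius is 0.06 m and the area is pi times 0.0036 square meters.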

[0106] At step 1411, if the user device is oriented along the muzzle of the weapon, the shot string is displayed on the user device at the shot string position. Separately, a gunshot sound is played. At step 1412, whether the phantom target has been hit is determined. The simulation system determines the position of the shot string, as previously described. The simulation system compares the position of the shot string to the position of the phantom target.

[0107] If the position of the shot string overlaps the position of the phantom target, then the phantom target is “hit”. If the position of the shot string does not overlap the phantom target, then the phantom target is “missed”.
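The overlap comparison may be sketched as follows, under the assumption that both the shot string and the phantom target are approximated as circles in a common plane. The specification does not state the geometry used for the comparison; the circle model and the names `overlaps` and `classify_shot` are introduced here for illustration only.

```python
import math


def overlaps(shot_center, shot_radius: float,
             target_center, target_radius: float) -> bool:
    """True if two circles (assumed geometry) touch or intersect."""
    distance = math.dist(shot_center, target_center)
    return distance <= shot_radius + target_radius


def classify_shot(shot_center, shot_radius: float,
                  target_center, target_radius: float) -> str:
    """Return "hit" when the shot string overlaps the phantom target, else "missed"."""
    if overlaps(shot_center, shot_radius, target_center, target_radius):
        return "hit"
    return "missed"
```

Two circles overlap when the distance between their centers does not exceed the sum of their radii, which is the only test this sketch performs.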

[0108] If the phantom target is hit and the user device is oriented toward the hit location, then method 1400 displays an animation of the target being destroyed on the user device
