
International Journal of Computer Science Trends and Technology (IJCST), Volume 5 Issue 2, Mar-Apr 2017

RESEARCH ARTICLE OPEN ACCESS

An Efficient Dynamic Slot Allocation Based On Fairness Consideration for MAPREDUCE Clusters

T. P. Simi Smirthiga [1], P. Sowmiya [2], C. Vimala [3], Mrs P. Anantha Prabha [4]

U.G. Scholar [1], [2] & [3], Associate Professor [4]

Department of Computer Science & Engineering

Sri Krishna College of Technology,

Kovaipudur, Coimbatore

Tamil Nadu -India

ABSTRACT

MapReduce is a popular parallel computing paradigm for large-scale data processing in clusters and data centers. However, slot utilization can be low, especially when the Hadoop Fair Scheduler (HFS) is used, because slots are pre-allocated between map and reduce tasks and because map tasks run before reduce tasks in a typical MapReduce environment. This problem is addressed by allowing slots to be dynamically (re)allocated to either map or reduce tasks depending on their actual requirements. HFS is a two-level hierarchy, with task slots allocated across pools at the top level and among multiple jobs within each pool at the second level. We propose two types of Dynamic Hadoop Fair Scheduler (DHFS), for two different levels of fairness (i.e., cluster level and pool level), to improve the makespan. The experimental results show that the proposed DHFS can improve system performance significantly (by 32%-55% for a single job and 44%-68% for multiple jobs) while guaranteeing fairness.

Keywords:- MapReduce, NameNode, JobTracker, TaskTracker, DataNode

I. INTRODUCTION

MapReduce has become an important paradigm for parallel data-intensive cluster programming due to its simplicity and flexibility. Essentially, it is a software framework that allows a cluster of computers to process a large set of unstructured or structured data in parallel. The number of MapReduce users is growing quickly. Apache Hadoop is an open-source implementation of MapReduce that has been widely adopted in industry. With the rise of cloud computing, it has become more convenient for IT businesses to set up a cluster of servers in the cloud and launch a batch of MapReduce jobs. As a result, a large variety of data-intensive applications now use the MapReduce framework.

In a classic Hadoop system, each MapReduce job is partitioned into small tasks which are distributed and executed across multiple machines. There are two kinds of tasks, i.e., map tasks and reduce tasks. Each map task applies the same map function to process a block of the input data and produces new intermediate results in the format of key-value pairs. The intermediate data is partitioned by hash functions and fetched by the corresponding reduce tasks as their inputs. Once all the intermediate data has been fetched, a reduce task starts to execute and produce the final results.

The Hadoop implementation closely resembles the MapReduce framework. A single master node is adopted to manage the distributed slave nodes. The master node communicates with slave nodes through heartbeat messages, which carry status information about the slaves. Job scheduling is performed by a centralized job tracker routine in the master node. The scheduler assigns tasks to slave nodes which have free resources and respond to heartbeats. The resources in each slave node are represented as map/reduce slots. Each slave node has a fixed number of slots, and each map slot processes only one map task at a time.

II. LITERATURE REVIEW

The bi-criteria algorithm for job scheduling uses a method for building an efficient algorithm for scheduling jobs in a cluster. Here jobs are considered as parallel tasks (PT) which can be scheduled on any number of processors. The main idea is to consider two criteria that are optimized together. These criteria are the

ISSN: 2347-8578 www.ijcstjournal.org Page 53


weighted minimal average completion time (minsum) and the makespan. They are chosen for their complementarity, to be able to represent both system administrator objectives and user-oriented objectives. The authors present a new algorithm for scheduling a set of independent jobs on a cluster, whose main feature is to optimize the two criteria simultaneously. Their experiments show that on average the performance ratio is very good, and the algorithm is fast enough for practical use [1].

Adaptive disk I/O scheduling in a virtualized environment is a challenging problem for performance predictability and system throughput in large-scale virtualized environments in the cloud. Virtualization has become the fundamental technique for cloud computing. As data-intensive applications become popular in the cloud, their performance on virtualized platforms calls for empirical evaluation and technical innovation. This study investigates the performance of a popular data-intensive system, MapReduce, on a virtualized platform. It reveals that different disk pair schedulers within the virtual machine manager and the virtual machines cause a noticeable variation in Hadoop performance in a virtual cluster. The authors address this problem by developing a meta-scheduler that selects suitable disk pair schedulers. Given an application, the program is divided into phases; currently coarse-grained phase detection is used, based on the program progress. A novel heuristic successively evaluates solutions (phase-scheduler assignments): the program is executed, the performance score is measured, and the heuristic then evaluates the solution against the best performance score. Performance results are obtained on a local virtual cluster based on the Xen hypervisor. For most of the Hadoop benchmarks, using multiple pairs in a single application provides a better performance score than any single-pair solution. More importantly, the solution provides up to 25% performance improvement over the default virtual Hadoop cluster configuration. Moreover, the performance improvement is proportional to the data size, VM consolidation and system scale [4].

The map-reduce paradigm is now standard in academia and industry for processing large-scale data. This work formalizes job scheduling in map-reduce as a novel generalization of the classical two-stage flexible flow shop (FFS) problem: instead of a single task at each stage, a job now consists of a set of tasks per stage. For this generalization, the authors consider the problem of minimizing the total flowtime and give an efficient 12-approximation in the offline setting and an online competitive algorithm. Motivated by map-reduce, they revisit the two-stage flow shop problem and give a dynamic program for minimizing the total flowtime when all the jobs arrive at the same time. To provide a simple formalization of the scheduling problem in the map-reduce framework, many issues that often have a large effect on performance in real systems are ignored. The scheduler is assumed to be aware of the job sizes. These may not be immediately available in practice, but in nearly all circumstances approximate job sizes can be determined from historical data, so that the bottleneck scenario is avoided [6].

III. PROPOSED METHODOLOGY

A. MapReduce Programming Model

MapReduce is a popular programming model for processing large data sets, initially proposed by Google. It has become a de facto standard for large-scale data processing in the cloud. Hadoop is an open-source Java implementation of MapReduce. When a user submits jobs to the Hadoop cluster, the Hadoop system breaks each job into multiple map tasks and reduce tasks. Each map task processes (i.e., scans and records) a data block and produces intermediate results in the form of key-value pairs. Generally, the number of map tasks for a job is determined by the input data: there is one map task per data block. The execution time of a map task is determined by the size of its input block. A reduce task consists of shuffle, sort and reduce phases. In the shuffle phase, the reduce task fetches the intermediate outputs from each map task. In the sort/reduce phase, the reduce task sorts the intermediate data and then aggregates the intermediate values for each key to produce the final output. The number of reduce tasks for each job is not fixed in advance; it depends on the intermediate map outputs.

MapReduce suffers from under-utilization of the respective slots as the number of map and reduce tasks varies over time, resulting in occasions where the number of slots allocated for map/reduce is smaller than the number of map/reduce tasks. Our dynamic slot


allocation policy is based on the observation that at In contrast to DHFS that considers the fairness in

different period of time there may be idle map (or reduce) its dynamic slots allocation independent of pools, but

slots. The unused map slots for those overloaded reduce rather across typed-phases, there is another alternative

tasks to improve the performance of the MapReduce fairness consideration for the dynamic slots allocation

workload. For example, at the beginning of MapReduce across pools, as we call Pool-dependent DHFS DHFS). It

workload computation, there will be no computing reduce assumes that each pool, consisting of two parts: map-

tasks and only computing map tasks, i.e., all the phase pool and reduce-phase pool, is selfish. That is, it

computation workload lies in the map-side. In that case, always tries to satisfy its own shared map and reduce

make use of idle reduce slots for running map tasks. That slots for its own needs at the map-phase and reduce-phase

is, we break the implicit assumption for current as much as possible before lending them to other pools

MapReduce framework that the map tasks can only run DHFS will be done with the following two processes:

on map slots and reduce tasks can only run on reduce (1). Intra-Pool dynamic slots allocation. First, each typed

slots. Instead, we modify it as follows: both map and phase pool will receive its share of typed-slots based on

reduce tasks can be run on either map or reduce slots. max-min fairness at each phase. There are four possible

When the user submits jobs to the Hadoop relationships for each pool regarding its demand (denoted

cluster, Hadoop system breaks each job into as map Slots Demand, reduce Slots Demand) and its

multiple map tasks and reduce tasks. share (marked as map Share, reduce Share) between two

Each map task processes (i.e. scans and records) phases.

a data block and produces intermediate results in

the form of key-value pairs.

The execution time for a map task is determined

by the data size of an input block.

The reduce tasks consists of shuffle/sort/reduce

phases. In the shuffle phase, the reduce task

fetch the intermediate outputs from each map

task.

In the sort/reduce phase, the reduce tasks sort

intermediate data and then aggregate the

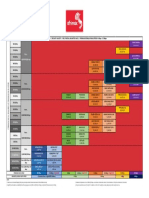

intermediate values for each key to produce the Fig.2. Hadoop Fairness Schedular File

final output.

C. Job Execution

Word Count example reads text files and counts

how often words occur. The input is text files and the

output is text files, each line of which contains a word

and the count of how often it occurred, separated by a tab.

Each mapper take a line as input and breaks it into words.

It then emits a key/value pair of the word and 1. Each

reducer sums the counts for each word and emits a single

key/value with the sum and word. As an optimization, the

reducer is also used as a combiner on the map outputs.

This reduces the amount of data sent across the network

by combining each word into a single record.

Fig.1 MapReduce Installation IV. RESULT

B. Pool-Independent Fairness Scheduler


The proposed system improves the utilization and performance of MapReduce clusters while guaranteeing fairness across pools. PI-DHFS and PD-DHFS are our first two attempts at achieving this goal, with two different fairness definitions. PI-DHFS strictly follows the definition of fairness given by traditional HFS, i.e., the slots are fairly shared across pools within each phase (map phase or reduce phase). However, the slot allocations are independent across phases. In contrast, PD-DHFS gives a new definition of fairness from the perspective of pools, i.e., each pool shares the total number of map and reduce slots from the map phase and reduce phase fairly with other pools.

V. PROCESS

A. Classify the Slots

We improve the performance of a MapReduce cluster by optimizing slot utilization, primarily from two perspectives. First, we classify the slots into two types, namely idle slots (no running tasks) and busy slots (with running tasks). Given the total number of map and reduce slots configured by users, one optimization approach (macro-level optimization) is to improve slot utilization by maximizing the number of busy slots and reducing the number of idle slots. Second, it is worth noting that not every busy slot is efficiently utilized. Thus, our second optimization approach (micro-level optimization) is to improve the utilization efficiency of busy slots after the macro-level optimization. In particular, we identify two main factors: (1) speculative tasks and (2) data locality. Based on these, we propose a dynamic utilization optimization framework for MapReduce to improve the performance of a shared Hadoop cluster under fair scheduling between users.

B. Dynamic Hadoop Slot Allocation

The current design of MapReduce suffers from under-utilization of the respective slots as the number of map and reduce tasks varies over time, resulting in occasions where the number of slots allocated for map/reduce is smaller than the number of map/reduce tasks. Our dynamic slot allocation policy is based on the observation that at different periods of time there may be idle map (or reduce) slots as the job proceeds from the map phase to the reduce phase. We can use the unused map slots for overloaded reduce tasks to improve the performance of the MapReduce workload, and vice versa. For example, at the beginning of a MapReduce workload computation, there are only computing map tasks and no computing reduce tasks, i.e., all the computation workload lies on the map side.

C. Fairness Calculation

Fairness is an important metric in the Hadoop Fair Scheduler. An allocation is fair when all pools have been allocated the same amount of resources. In HFS, task slots are first allocated across the pools, and then the slots are allocated to the jobs within each pool. Moreover, a MapReduce job computation consists of two parts: map-phase task computation and reduce-phase task computation. One question is how to define and ensure fairness under the dynamic slot allocation policy. The resource requirements of map slots and reduce slots are generally different, because map tasks and reduce tasks often exhibit completely different execution patterns. Reduce tasks tend to consume many more resources, such as memory and network bandwidth. Simply allowing reduce tasks to use map slots requires configuring each map slot to take more resources, which consequently reduces the effective number of slots on each node and leaves resources under-utilized at runtime. Thus, a careful design of the dynamic allocation policy is important and needs to be aware of this difference.
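As an illustration of Sections V-B and V-C, the following is a minimal Python sketch of dynamic slot allocation with per-phase fairness. It is not the scheduler implementation evaluated in this paper: the function names `max_min_share` and `dynamic_slot_allocation`, the pool names and the slot counts are all hypothetical, and the resource differences between map and reduce slots discussed above are ignored. The first function splits the slots of one phase among pools by max-min fairness; the second lets a phase with unmet demand borrow the idle slots of the other phase.

```python
from typing import Dict, Tuple


def max_min_share(demands: Dict[str, int], capacity: int) -> Dict[str, int]:
    """Split `capacity` slots among pools by (integer) max-min fairness."""
    alloc = {pool: 0 for pool in demands}
    active = [pool for pool in sorted(demands) if demands[pool] > 0]
    remaining = capacity
    while remaining > 0 and active:
        # Offer every still-unsatisfied pool an equal share of what is left.
        share = max(remaining // len(active), 1)
        for pool in list(active):
            if remaining == 0:
                break
            give = min(share, demands[pool] - alloc[pool], remaining)
            alloc[pool] += give
            remaining -= give
            if alloc[pool] == demands[pool]:
                active.remove(pool)  # a satisfied pool releases its claim
    return alloc


def dynamic_slot_allocation(map_slots: int, reduce_slots: int,
                            map_demand: int, reduce_demand: int) -> Tuple[int, int]:
    """Return (slots running map tasks, slots running reduce tasks).

    Each phase first uses its own typed slots; a phase with unmet demand
    then borrows whatever the other phase leaves idle.
    """
    map_run = min(map_demand, map_slots)
    reduce_run = min(reduce_demand, reduce_slots)
    idle_map = map_slots - map_run
    idle_reduce = reduce_slots - reduce_run
    # At most one of the two borrow amounts below can be non-zero.
    map_run += min(map_demand - map_run, idle_reduce)
    reduce_run += min(reduce_demand - reduce_run, idle_map)
    return map_run, reduce_run


if __name__ == "__main__":
    # Hypothetical cluster: 4 map slots and 4 reduce slots, start of a workload
    # where only map tasks are pending.
    map_demands = {"poolA": 6, "poolB": 2}
    reduce_demands = {"poolA": 0, "poolB": 0}
    maps, reduces = dynamic_slot_allocation(
        4, 4, sum(map_demands.values()), sum(reduce_demands.values()))
    print("map phase:", max_min_share(map_demands, maps))
    print("reduce phase:", max_min_share(reduce_demands, reduces))
```

In this sketch fairness is applied within each phase after the phase totals are fixed, which corresponds to the pool-independent view; a pool-dependent variant would instead let each pool reuse its own idle slots across phases before lending them to other pools.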


Fig.3. HDFS File Properties Specification

FLOW CHART: Job Arrival, HDFS, Hadoop Map Reducer, Server, Name Node with Job Tracker, Slot allocation, Mapper slot, Reducer slot, Slot usage, Mapper Task, Reducer Task

VI. CONCLUSION

We developed an efficient fairness scheduler by implementing pool-based distribution of arriving jobs across the cluster. Future work includes integrating more advanced scheduling management with resource discovery, in order to demonstrate the effectiveness of our deadline algorithm in dynamic node formation. In addition, we aim to apply the solution to large-scale virtualized grids and inter-cloud scenarios to explore the efficiency of the algorithm in highly dynamic and large-scale cases.

REFERENCES

[1] P.-F. Dutot, L. Eyraud, G. Mounie, and D. Trystram, "Bi-criteria algorithm for scheduling jobs on cluster platforms," in Proc. 16th Annu. ACM Symp. Parallelism Algorithms Archit., 2004, pp. 125-132.

[2] P.-F. Dutot, G. Mounie, and D. Trystram, "Scheduling parallel tasks: Approximation algorithms," in Handbook of Scheduling: Algorithms, Models, and Performance Analysis, J. T. Leung, Ed. Boca Raton, FL, USA: CRC Press, ch. 26, pp. 26-1 to 26-24.

[3] J. Gupta, A. Hariri, and C. Potts, "Scheduling a two-stage hybrid flow shop with parallel machines at the first stage," Ann. Oper. Res., vol. 69, pp. 171-191, 1997.

[4] S. Ibrahim, H. Jin, L. Lu, B. He, and S. Wu, "Adaptive disk I/O scheduling for mapreduce in virtualized environment," in Proc. Int. Conf. Parallel Process., Sep. 2011, pp. 335-344.


[5] G. J. Kyparisis and C. Koulamas, "A note on makespan minimization in two-stage flexible flow shops with uniform machines," Eur. J. Oper. Res., vol. 175, no. 2, pp. 1321-1327, 2006.

[6] B. Moseley, A. Dasgupta, R. Kumar, and T. Sarlos, "On scheduling in map-reduce and flow-shops," in Proc. 23rd Annu. ACM Symp. Parallelism Algorithms Archit., 2011, pp. 289-298.

[7] C. Oğuz, M. F. Ercan, T. E. Cheng, and Y. Fung, "Heuristic algorithms for multiprocessor task scheduling in a two-stage hybrid flow-shop," Eur. J. Oper. Res., vol. 149, no. 2, pp. 390-403, 2003.

[8] J. Polo, Y. Becerra, D. Carrera, M. Steinder, I. Whalley, J. Torres, and E. Ayguade, "Deadline-based mapreduce workload management," IEEE Trans. Netw. Service Manage., vol. 10, no. 2, pp. 231-244, Jun. 2013.

[9] P. Sanders and J. Speck, "Efficient parallel scheduling of malleable tasks," in Proc. IEEE Int. Parallel Distrib. Process. Symp., 2011, pp. 1156-1166.

[10] M. Zaharia, D. Borthakur, J. Sen Sarma, K. Elmeleegy, S. Shenker, and I. Stoica, "Job scheduling for multi-user mapreduce clusters," EECS Dept., Univ. California, Berkeley, CA, USA, Tech. Rep. UCB/EECS-2009-55, Apr. 2009.
