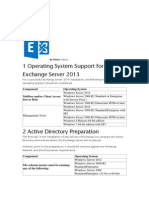

WHITE PAPER

Microsoft Exchange

Best Practices

Introduction

Microsoft Exchange is the world's most widely deployed email and collaboration

solution. Microsoft continues to improve the product with each new revision, pushing

the limits of existing server and storage devices. Microsoft Exchange 2007 is the

latest version of the popular email solution.

The most critical component of an Exchange deployment is the storage subsystem

where the data is held. The data flow from the storage to the Exchange application

tends to be of small block size and random in nature. This type of workload is one

of the most demanding for any storage subsystem to handle without performance

bottlenecks. Incorrect sizing of the storage array or improper deployment practices

at the data layer will lead to serious performance consequences for the Exchange

solution. While Exchange goes far beyond offering just email service and management, the scope of this paper is storage sizing and deployment practices for Microsoft

Exchange 2007 SP1 on the Pillar Data Systems Pillar Axiom in an email environment.

Using the Pillar Axiom for Exchange

The Pillar Axiom is an ideal storage system for Microsoft Exchange 2007 deployments.

Its unique architecture delivers high utilization rates while providing robust performance, and it provides service levels based on application and data priority.

The Pillar Axiom Array

The Pillar Axiom's combination of large cache and distributed RAID creates an ideal environment for running Exchange. The effect of the randomness inherent in the Exchange write I/Os is absorbed by the cache, and the random nature of the read I/Os is masked by spreading data across dozens of spindles. Such a profile typically saps the performance of traditional storage arrays without this type of architecture. The Pillar Axiom is so efficient that most Exchange deployments will work quite well on a Pillar Axiom with SATA drives; it's very rare that a deployment will require an all-FC solution. It is very common, however, to use a mix of FC and SATA drives in an Exchange solution on a Pillar Axiom.

[Figure: Storage System Fabric]

Pillar Data Systems | Microsoft Exchange Best Practices

Pillar Axiom Architecture

The Pillar Axiom storage system consists of three basic components: pilot, slammer,

and bricks.

Slammer

The slammer is the I/O engine of the Pillar Axiom system. It consists of cache, back-end switches, and two control units (CUs) in an active-active asymmetric configuration, and is also where the Pillar AxiomONE software resides. A slammer can be SAN

(FC and/or iSCSI) or NAS (CIFS and/or NFS). A single Pillar Axiom supports up to

four slammers (SAN, NAS or any combination of the two). The slammer does not

perform RAID calculations: its sole responsibility is managing the quality of service

(QoS) of the data flowing to the back-end disk pool. This allows the Pillar Axiom

to scale in capacity without sacrificing performance. In fact, performance actually

improves as capacity grows. Each LUN defined in a slammer is spread across multiple

bricks in the back end.

Pillar's QoS

Pillar does not believe that all I/O is created equally, so all LUNs should not be created equally. Much like quality of service (QoS) in a network, Pillar's QoS technology allocates system resources and handles each data flow according to business priority. With a simple management interface, IT managers can provision different classes of service within a single system, aligning storage resources with the business value of each data type and application.

Bricks

A brick is a drive tray or shelf with two RAID controllers and is fully populated with

active FC or SATA drives as well as a dedicated hot spare. One or more bricks make

up the back-end disk storage pool that is accessed by up to four slammers. Each brick

is connected to the slammer(s) through the Storage System Fabric, which is a private

switched network. Only data flowing between the bricks and slammers uses this

fabric. The slammer stores many I/Os in cache and then flushes them to the bricks.

With the distributed RAID controllers in each brick, there is no bottleneck writing

the data. An additional benefit of this type of back-end architecture is that drive

rebuilds occur rapidly and without impact on the performance of the applications

using the Pillar Axiom.

Pilot

The pilot manages and monitors the Pillar Axiom. Even though it plays a supporting

role in day-to-day activities of the storage system, it too is configured as a highly available solution and consists of dual redundant control units. It offloads the overhead associated with management and monitoring of the entire system.

Exchange Storage Architecture

At the heart of Exchange 2007 is the Information Store (IS), which is made up of one

or more database and log files that are divided into storage groups. The IS can be

split across up to five storage groups for Exchange 2007 Standard Edition, and 50

storage groups in Exchange 2007 Enterprise Edition.

Each storage group can have up to five database files and only one log file. It is a

Microsoft best practice to only use one database per storage group until the storage

group limit is reached, as well as to limit any given database file to 200GB or less in

order to aid backup and recovery. In addition, the LUN hosting the file should have

sufficient space to allow for overhead, typically 20%.

Exchange 2007 I/O Improvements

Exchange 2007 has many improvements over Exchange 2003. Much of this is due to

the move from 32-bit to 64-bit processing, database cache improvements, and a

number of other under-the-hood modifications.

64-bit Processing

There are numerous advantages to the 64-bit memory architecture over 32-bit, but

there are two main points in relation to Exchange: non-paged memory scalability and

increased database cache size.

Non-paged Memory Scalability

Non-paged memory is a section of memory that cannot be paged to disk and serves

many functions, including filter drivers (anti-virus, anti-malware, appliance replication

agents, and backup agents) and client connections.

On a 32-bit system, non-paged memory is limited to 256MB. Exchange requires extended memory; hence, Microsoft recommends using the /3GB switch as a best practice. This switch changes the kernel memory allocation from 2GB reserved for the system and 2GB reserved for applications to 1GB for the system and 3GB for applications. Halving the system memory space also halves the non-paged memory allocation, from 256MB to 128MB. Microsoft also recommends keeping 30MB of that 128MB free, leaving less than 100MB for Exchange. This is quickly used, and if a device or filter driver leaks memory, the system can crash when the 100MB is exhausted. Also note that some hardware devices (such as some HBAs) reserve space in this area.
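The arithmetic behind the 32-bit limit can be sketched in a few lines of Python. This is only an illustration of the figures quoted above; the function name and parameters are hypothetical and are not part of any Microsoft or Pillar tool.

```python
def usable_nonpaged_mb(base_limit_mb: int = 256,
                       use_3gb_switch: bool = True,
                       reserve_mb: int = 30) -> int:
    """Non-paged memory left for Exchange on a 32-bit server, in MB.

    The /3GB switch halves the kernel address space, which also halves
    the non-paged memory limit; reserve_mb is the amount kept free.
    """
    limit_mb = base_limit_mb // 2 if use_3gb_switch else base_limit_mb
    return limit_mb - reserve_mb


# With the figures from the text: 256MB -> 128MB -> 98MB usable.
print(usable_nonpaged_mb())
```

Any space reserved by hardware devices such as HBAs would reduce the result further.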

In stark contrast to the 256MB limit of non-paged memory supported on a 32-bit

system, the 64-bit server limit is 256GB, subject to physical RAM limits in the server.

This boost in non-paged memory space not only frees up space from a management

and scalability perspective, but also improves performance.

Database Cache

On a 32-bit system the maximum cache size for the information store is 900MB. In

simple terms, when the needed information is available in cache there is no need to

go to disk to service the request. This cache is shared across the user mailboxes on

the server. A 32-bit system hosting 2000 users would effectively have a database

cache of 0.45MB per user. In 64-bit systems this limit is removed. It's not uncommon

for a new server to have 32GB or more of RAM, which would significantly increase

the amount of cache per user. This boost in user cache has significant positive influences on performance.
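The per-user cache figure is a simple division, sketched below in Python. The 32-bit numbers come from the text; the 64-bit case assumes, purely for illustration, that 24GB of a 32GB server ends up as database cache, which will differ on a real system.

```python
def cache_per_user_mb(cache_mb: float, users: int) -> float:
    """Database cache available per mailbox user, in MB."""
    if users <= 0:
        raise ValueError("users must be positive")
    return cache_mb / users


# 32-bit case from the text: 900MB of cache shared by 2000 users.
cache_32bit = cache_per_user_mb(900, 2000)

# Hypothetical 64-bit case: assume 24GB of a 32GB server is used as cache.
cache_64bit = cache_per_user_mb(24 * 1024, 2000)

print(f"32-bit: {cache_32bit:.2f}MB per user")
print(f"64-bit: {cache_64bit:.2f}MB per user")
```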

Considerations for a Microsoft Exchange Implementation

A key to successful implementation is careful planning. When planning to implement

Microsoft Exchange 2007, you must take the following into consideration:

Storage configuration: DAS vs. SAN

Sizing metrics for Exchange, including user I/O profiles

Capacity requirements

Considerations for Storage Group, Volume, and LUN Design

DAS vs. SAN for Exchange

Microsoft has dramatically moved Exchange forward both in features and performance, and 64-bit processing enables a much lighter demand on the disk storage

system. These improvements have caused some misunderstanding around whether

the ideal storage platform for Exchange is direct-attached storage (DAS) or storage

area network (SAN). For smaller deployments the improvements in performance and

reduction in disk demands means that DAS may be a viable option for Exchange

2007 storage, but SAN is also appropriate as long as the storage array in question

is application aware.

When using SAN technology it is vital to choose an application-aware storage array

to avoid effectively turning a SAN array into an expensive external DAS array. Legacy

arrays do not have the ability to treat any one I/O differently from another I/O, so the

entire array must be dedicated to Exchange, as the load from any other application

on the same system could interfere with the Exchange service. This leads to low disk

capacity utilization, high cost, and the mistaken assumption that Exchange may be

better suited for DAS.

Intelligence Drives Down Costs

The Application-Aware Pillar Axiom storage system drives down acquisition and

operating costs by:

Buying less storage. Buy too few spindles of high capacity storage and performance

will suffer and utilization rates will be low. Buy too many spindles of low capacity

drives and costs increase dramatically. What if you could get differentiated services

from multiple LUNs striped across high-capacity spindles? You get the best of both

worlds: high performance and high utilization; you buy less storage.

Provisioning storage like you provision your servers. By provisioning LUNs by

application, much like you provision your virtualized servers, management and

maintenance become simpler tasks. No more array read/write cache rebalancing

due to changes in application workload. Ease of management becomes more and

more important as capacity requirements grow.

Dynamically reassigning resources temporarily or permanently. Storage silos

are a thing of the past. Arrays shouldn't be disposable devices bought for a project

and then scrapped at the end of the project or left serving data that is no longer

accessed. Once a project is completed, reassign the priority of the LUNs and start

the new project on the same array. If a database has high I/O needs at the end of

each month, don't provision your storage systems for a peak that only comes

every four weeks; simply set up a policy to increase the priority of that application

temporarily, and then reset it.

By using a Pillar Axiom Application-Aware array system connected to a SAN, not

only is it possible to drive much higher levels of utilization (typically up to 80%),

but it's also possible to co-host other applications on the same array. In fact, you

can place other applications on the same disks with Exchange and still ensure that

each application gets the performance it needs without affecting the others, all

with a level of platform reliability that exceeds that of DAS.

By deploying Microsoft Exchange 2007 on a Pillar Axiom, not only can the needs of

Exchange be met, but also those of Microsoft SQL servers, Active Directory controllers, file and print servers, SharePoint servers, WORM-based email archive, and

more. All in the same array.

Sizing Exchange Metrics: User I/O Profiles

To accurately size a Pillar Axiom storage system for Exchange 2007, you start by developing User I/O Profiles, then determine the number of users, and the size of each

mailbox. This all leads toward the calculation of an aggregate IOPS rate and then

building the matching storage to support the load.

A User I/O Profile is a measure of the work that Exchange must do to support the

user. Table 1 below lists guidelines on calculating how many IOPS (I/Os per second)

will be needed for each type of user. For example, a company with 2000 very

heavy users will require a disk system that can support at least 960 IOPS (2000 mailboxes * .48 IOPS/mailbox = 960 IOPS).

Table 1. Calculating IOPS

| Mailbox User Type | Message Profile | Logs Generated/Mailbox/Day | I/O per User (IOPS) |
|---|---|---|---|
| Light | 5 sent/20 received | 6 | .08 |
| Medium | 10 sent/40 received | 12 | .16 |
| Heavy | 20 sent/80 received | 24 | .32 |
| Very Heavy | 30 sent/120 received | 36 | .48 |

Additional third-party applications will increase the IOPS requirement. For example,

a mailbox with an associated BlackBerry account will need twice the IOPS of a normal

mailbox. More information can be found at http://msexchangeteam.com.
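As a rough sketch of how the Table 1 figures combine into an aggregate requirement, the Python below multiplies mailbox counts by the per-user IOPS values and doubles the figure for mailboxes with a BlackBerry account, as described above. The function and dictionary names are illustrative only, and other third-party load (virus scanning, archiving) is not modeled.

```python
# Per-mailbox IOPS guidelines from Table 1.
IOPS_PER_MAILBOX = {
    "light": 0.08,
    "medium": 0.16,
    "heavy": 0.32,
    "very_heavy": 0.48,
}


def required_iops(mailboxes_by_type, blackberry_by_type=None):
    """Aggregate IOPS for a mix of user types.

    mailboxes_by_type maps a user type to its mailbox count.
    blackberry_by_type maps a user type to how many of those mailboxes
    have a BlackBerry account (each counted at twice the normal IOPS).
    """
    blackberry_by_type = blackberry_by_type or {}
    total = 0.0
    for user_type, count in mailboxes_by_type.items():
        per_mailbox = IOPS_PER_MAILBOX[user_type]
        with_bb = blackberry_by_type.get(user_type, 0)
        if with_bb > count:
            raise ValueError("more BlackBerry users than mailboxes")
        total += (count - with_bb) * per_mailbox + with_bb * 2 * per_mailbox
    return total


# The example from the text: 2000 very heavy users.
print(round(required_iops({"very_heavy": 2000})))
# Same population, assuming 500 of them also carry a BlackBerry.
print(round(required_iops({"very_heavy": 2000}, {"very_heavy": 500})))
```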

Note that the I/O requirement per mailbox type is dramatically lower for Exchange

2007 when compared to Exchange 2003.

Capacity Requirements

Another important consideration when planning an Exchange deployment is capacity

requirements. Due to a rapid rise in capacity versus a flat trend in disk performance,

Exchange 2007 capacity requirements are almost always overshadowed by IOPS

requirements. When using legacy (non-application-aware) arrays, this leads to issues

of low utilization and high cost in all but the lowest workload environments. Nearly

all of the time, the number of spindles required to meet the IOPS requirements will

provide more than sufficient capacity for the solution. Legacy arrays cannot effectively use this capacity for other applications, so it goes unused.

However, when using an application-aware storage array with a robust QoS, correct

capacity sizing allows for unused space to be utilized for other tasks such as file servers,

SQL, or backup solutions.

Planning your capacity requirements means taking into account the requirements for

information stores.

Information Stores

The Information Store consists of the database and log files. The first step in calculating the capacity you'll need for the Information Stores is to calculate the capacity for the mailboxes. Microsoft provides the following basic equation to calculate storage requirements for mailboxes:

Mailbox Capacity = Num_Mailboxes * Mailbox_Size

Table 2 below shows guidelines on calculating typical Mailbox_Size estimates based

on the types of users you are planning for:

Table 2. Typical mailbox sizes

| User Type | Mailbox Size |
|---|---|
| Light | 50MB |
| Medium | 100MB |
| Heavy | 200MB |
| Very Heavy | 500MB to 2GB |

These values are based upon site averages observed by Microsoft, and specific

site requirements will vary based upon their specific needs. If the implementation

is a technology refresh, the Exchange admin will know the average mailbox sizes.

However, be sure to plan for growth.

Given the Microsoft recommendation of 200GB per database file, calculating the

number of users per database file is simply:

Number of Users per database = 200GB / User Mailbox Size

The next step is to add capacity for logs. Storage for logs should be adequate to

hold three days' worth of log files. If no data is available to determine a suitable size

for the Log LUNs, use 50GB per storage group.
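The capacity steps above can be put together in a short Python sketch. It assumes binary units (1GB = 1024MB), one database per storage group, and the 50GB fallback for logs; the function and constant names are hypothetical and growth headroom is not included.

```python
import math

MAX_DB_GB = 200                 # database size limit recommended in the text
LOG_GB_PER_STORAGE_GROUP = 50   # fallback log allowance given in the text
MB_PER_GB = 1024                # assumption: binary units


def size_information_store(num_mailboxes: int, mailbox_size_mb: int) -> dict:
    """Estimate mailbox capacity, database count, and log space."""
    if num_mailboxes <= 0 or mailbox_size_mb <= 0:
        raise ValueError("mailbox count and size must be positive")
    mailbox_capacity_gb = num_mailboxes * mailbox_size_mb / MB_PER_GB
    users_per_database = (MAX_DB_GB * MB_PER_GB) // mailbox_size_mb
    if users_per_database == 0:
        raise ValueError("a single mailbox exceeds the database size limit")
    # Assumes one database per storage group, as the text recommends.
    databases = math.ceil(num_mailboxes / users_per_database)
    return {
        "mailbox_capacity_gb": mailbox_capacity_gb,
        "users_per_database": users_per_database,
        "databases": databases,
        "log_capacity_gb": databases * LOG_GB_PER_STORAGE_GROUP,
    }


# Example: 2000 heavy users at 200MB each (Table 2).
plan = size_information_store(num_mailboxes=2000, mailbox_size_mb=200)
for name, value in plan.items():
    print(f"{name}: {value}")
```

In a technology refresh, the measured average mailbox size and observed log generation rate would replace the table value and the 50GB fallback.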

Storage Group, LUN, Partition, and Volume Design Considerations

You must consider storage groups, LUNs, partitions, and volumes, adjusting settings as appropriate for your deployment. Microsoft recommendations are explained here: http://technet.microsoft.com/en-us/library/bb331954(EXCHG.80).aspx

Storage Group

Microsoft recommends that you place each database in its own storage group

until the maximum number of storage groups (five in Standard Edition, 50 in Enterprise Edition) is reached. There are several

advantages to this:

Allows spreading of the load of mailboxes across as many databases and storage

groups as possible.

Creates an Exchange storage topology that can be managed more easily.

Decreases database size.

Reduces log files and log traffic conflict and sharing between multiple databases.

Increases I/O manageability.

Improves recoverability.

Increases aggregate checkpoint depth per user.

Databases in a storage group are not completely independent because they share

transaction log files. As the number of databases in a storage group increases,

more transaction log files are created during normal operation. This larger number

of transaction log files requires additional time for transaction log replay during

recovery procedures, resulting in longer recovery times.

All storage groups enabled for continuous replication are limited to a single database

per storage group. This includes all storage groups in a cluster continuous replication

(CCR) environment, as well as any storage group enabled for local continuous replication (LCR) and/or standby continuous replication (SCR). A storage group with multiple

databases cannot be enabled for LCR or SCR, and after a storage group is enabled

with continuous replication, you cannot add additional databases to it.

LUN, Partition, and Volume

In addition to the storage group, there are three additional objects that have to

be configured: LUN, partition, and volume.

A LUN is the amount of disk storage presented to the Windows operating system

that shows as a physical disk under Disk Management. LUNs are created on the

Pillar Axiom using either the GUI or the Command-Line Interface (CLI) tool.

Partitions are allocations of physical disk space upon which volumes can be created.

There are two partition styles: Master Boot Record (MBR) and GUID Partition Table (GPT). An MBR disk can hold up to four primary partitions, or up to

three primary partitions and one extended partition; a GPT disk can hold up to 128 partitions.

The Volume may take up all of a disk or use just a section of a partition. In Windows

it is also referred to as a Logical Disk. It is here that the operating system creates

file systems.

Disk Configurations in an Exchange Deployment

The correct choice for configuring a storage system for an Exchange deployment

depends on a number of considerations, including operational simplicity and the

requirements for I/O and capacity. There are many possible configurations that can

be used with the Pillar Axiom and Exchange, including:

One-to-one

One-to-many

Hybrid

One-to-One

A one-to-one configuration is simply one Pillar Axiom LUN to one primary partition

and one volume filling that partition for each storage group file, with the LUN size

based on its role as either database or log. One-to-one is the simplest LUN design

to understand and is simple to deploy and manage. It is recommended for deployments where the overall user count is likely to be 1000 or fewer. When considering

higher user counts, depending on the user profiles required, it is likely that a

one-to-many design would be more suitable.

One-to-Many

As the required user count grows, it becomes more efficient to host multiple

volumes on a single Pillar Axiom LUN. In deployments where a high number of

storage groups are used, operational simplicity can be maintained by using mount

points rather than drive letters. The ratio of the number of volumes per LUN is

dependent on a number of factors:

I/O profile of the user community of each storage group

The number of users in each storage group

The QoS setting of each LUN

Using the one-to-many model reduces the number of LUNs created on the Pillar

Axiom and presented to the server, but still involves a large number of volumes.

While the Pillar Axiom is designed to manage high-contention environments

efficiently, there is a point where adding more databases to the same LUN will

potentially yield less performance benefit.

Hybrid

A hybrid configuration is a mixture of the one-to-one and one-to-many models.

It strays from Microsoft best practice but in some environments may yield other

advantages while delivering a high performance, reliable storage layer. An example

of this would be to create one or more LUNs to hold all volumes associated with

logs. This approach has the advantages of reducing the potential for contention

from different I/O types by holding all sequential write operations of the logs to

a single or few LUNs and isolating the random I/Os of database files to other LUNs.

The choice of backup solution can also affect LUN design. When using a hardware

VSS provider, the one-to-one configuration must be used. If using a software-based

VSS provider, then any of the configurations may be used.

How to Choose the Right Design

The Microsoft Exchange product team has released a storage design tool that is

useful to ensure all data gathering and planning activities are completed. It

can be found here: http://msexchangeteam.com/archive/2007/01/15/432207.aspx

Note that the tool is not designed with any one specific storage vendor in mind and

as such there are a few differences when designing with the Pillar Axiom because it

is application aware.

Deploying Exchange on a Pillar Axiom is achieved with the following steps:

Storage solution sizing

LUN implementation

Solution testing

Storage Solution Sizing

Microsoft has published the following formula to assist in determining Exchange

performance needs:

IOPS = Num_Mailboxes * DB_Volume

Refer to Table 1 in Sizing Exchange Metrics: User I/O Profiles for guidelines

on how to define the database volume IOPS for

each user. Also take into account the additional I/O load created by features such

as BlackBerry servers, virus scan applications, and email archiving as these can

have a large impact on your numbers.

LUN Implementation

Pillar Axiom LUNs for use with Exchange are created by sizing the LUN, assigning

the proper QoS values, making LUN-to-volume alignment adjustments, and setting the

queue depth on the server.

LUN Sizing

Since a storage group consists of a database file not to exceed 200GB plus a 20%

working area, each volume needs to be 240GB. Therefore a LUN created to house

a number of volumes should be calculated using:

Pillar Axiom LUN Size = 240GB * number of volumes
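The sizing rule above is easy to express in Python. This is a minimal sketch using the 200GB database limit and 20% working area from the text; the function names are illustrative only.

```python
def volume_size_gb(db_limit_gb: int = 200, overhead_pct: int = 20) -> float:
    """Volume size for one database file plus its working area, in GB."""
    return db_limit_gb * (100 + overhead_pct) / 100


def lun_size_gb(num_volumes: int,
                db_limit_gb: int = 200,
                overhead_pct: int = 20) -> float:
    """Pillar Axiom LUN size for a LUN that houses several volumes, in GB."""
    if num_volumes < 1:
        raise ValueError("a LUN must hold at least one volume")
    return num_volumes * volume_size_gb(db_limit_gb, overhead_pct)


print(volume_size_gb())   # one volume: 200GB plus 20%
print(lun_size_gb(4))     # a one-to-many LUN holding four volumes
```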

QoS Settings

Configuring QoS for LUNs on a Pillar Axiom is a simple part of the LUN creation

process, consisting of setting the priority, expected access pattern, and I/O read-write bias.

All SATA-based database LUNs should be created with a low priority, mixed access,

and a mixed I/O bias (Figure 1).

Figure 1. QoS settings for SATA-based database LUN

After the LUN is created, the QoS settings should be changed to a high priority

(Figure 2). Use the Temporary option to keep the data striped in the low band

on the disks but to increase its processing allocation so the LUN has the proper

resources to perform adequately.

Figure 2. Temporary change to QoS settings

Log LUNs should be created exactly like the database LUNs but should be kept

at the low priority. Should any fine tuning be needed, the QoS settings can be

changed on the fly. Under no circumstances should sequential write be used.

In a mixed Fibre Channel/SATA Pillar Axiom solution, the database LUNs should be

configured with a premium priority, mixed access type and mixed IO bias (Figure 3).

This will force these LUNs to reside on FC drives.

Figure 3. QoS settings for mixed FC/SATA-based database LUN

The LUNs for the logs in a mixed Fibre Channel/SATA solution should be created with

a low priority, mixed access type and mixed IO bias. As with an all-SATA solution,

the priority of the log LUNs can be fine tuned on the fly if needed.

It is a Microsoft best practice that database and log LUNs should not share the same

spindles if possible to ensure optimal performance. With the Pillar Axiom, this is not

necessary to optimize performance since the LUNs will have plenty of I/O potential.

If this is a requirement, LUN separation at the disk level can be verified using the

storage_allocation command in the Pillar Axiom CLI tool.

LUN to Volume Alignment

Low service time, also known as disk I/O latency, is critical in Exchange environments. The Pillar Axiom can write data to the disks in various I/O sizes. However, a single RAID controller will receive I/O in segments of up to 640KB, and each drive in the RAID group receives a strip of up to 128KB for SATA disks or 64KB for FC disks. Pillar's testing shows minimal difference in IOPS between the default alignment offset and an alignment offset of, say, 128KB or 640KB. However, a 128KB alignment offset does provide the lowest service times for SATA drives. For FC drives, 64KB or 128KB alignment provides the lowest service times.

Pillar recommends a 128KB alignment offset on all partitions to be used for

Exchange, whether the LUN resides on FC or SATA.

Use the Diskpart command-line tool in Windows to create the partitions for the LUNs

for Exchange storage. Diskpart allows the administrator to set the alignment for each

partition to optimize performance.

To start a Diskpart session, open a Windows command shell and type diskpart.

To create a partition with the proper 128KB alignment on a LUN, type:

list disk

select disk X (where X is the disk number in question)

create partition primary size=225280 align=128 (where size is in MB and alignment is in KB)
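When many partitions have to be created, the same commands can be generated as a script file and passed to Diskpart with its /s option. The Python below is a minimal sketch that only builds the script text; the function name is hypothetical, the disk number 3 is a placeholder, and the script should be reviewed before it is run against a real server.

```python
def diskpart_script(disk_number: int, size_mb: int, align_kb: int = 128) -> str:
    """Build Diskpart script text that creates one aligned primary partition."""
    if disk_number < 0 or size_mb <= 0 or align_kb <= 0:
        raise ValueError("disk number, size, and alignment must be valid")
    lines = [
        f"select disk {disk_number}",
        f"create partition primary size={size_mb} align={align_kb}",
    ]
    return "\n".join(lines) + "\n"


# Placeholder disk number; the size matches the example in the text (MB).
script = diskpart_script(disk_number=3, size_mb=225280)
print(script, end="")
```

The output can be saved to a text file and run with `diskpart /s` followed by the file name.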

Queue Depth

For Windows systems, queue depth (the number of concurrent I/Os) is set via a registry setting. While the value can be from 1 to 254, it is recommended for Exchange systems that the queue depth be set between 128 and 254, depending on the number of servers attached. The following example shows the steps to set the queue depth; for two mailbox servers or fewer, use 254. Pillar Professional Services can be engaged for specific environments.

Use the following procedure to change the qd parameter for QLogic cards. For other

FC and iSCSI adapters please see OEM documentation or the OEM website.

1. From the Windows Start menu, select Run, and enter REGEDT32.

2. Select HKEY_LOCAL_MACHINE and follow the tree structure down to the

QLogic driver as follows:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Ql2300\Parameters\Device

3. Double click on DriverParameter:REG_SZ:qd=32.

4. If the string qd= does not exist, append to end of string ;qd=32.

5. Enter a value up to 254 (0xFE). The default value is 32 (0x20).

6. Click OK.

7. Exit the Registry Editor and reboot the system.

Use the HBAnyware tool, from Emulex, to set queue depth on Emulex HBAs.
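The string edit in steps 3 through 5 can be sketched in Python to show what the resulting DriverParameter value should look like. The sketch only manipulates text and does not touch the registry; it assumes options are separated by semicolons, as the ;qd=32 example suggests, and the option name someOption is a made-up placeholder.

```python
def set_queue_depth(driver_parameter: str, qd: int) -> str:
    """Return the DriverParameter string with qd set to the given value."""
    if not 1 <= qd <= 254:
        raise ValueError("queue depth must be between 1 and 254")
    options = [opt.strip() for opt in driver_parameter.split(";") if opt.strip()]
    updated = []
    found = False
    for option in options:
        if option.lower().startswith("qd="):
            updated.append(f"qd={qd}")   # replace the existing value
            found = True
        else:
            updated.append(option)       # keep other options untouched
    if not found:
        updated.append(f"qd={qd}")       # append when qd= is missing
    return ";".join(updated)


print(set_queue_depth("qd=32", 254))
print(set_queue_depth("someOption=1", 128))
```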

Solution Testing

Once the system has been designed, installed and configured, it is important to

confirm the performance levels of the subsystem with the Microsoft testing tool

Jetstress. This will confirm expected results and determine maximum performance

metrics for the implemented design.

To obtain an accurate evaluation of the configuration, configure the mailbox

count and mailbox size to be indicative of the environment. It may be necessary

to use manual tuning for thread count, but all other settings should remain as the

default. Settings should be consistent between runs while making tuning changes

in the environment.

Other Exchange Considerations

RAID 10 vs. RAID 5

Microsoft often discusses in detail the write penalty of using RAID 5 for Exchange

storage. Pillar does not agree with their out-of-date assessment given the performance

advances in hardware RAID solutions. The Pillar Axiom optimizes I/O on the slammer,

provides benefit from cached writes, and stripes the LUNs across multiple Hardware

RAID sets. It is also simple to change between the RAID 5 and RAID 10 protection

schemes on the Pillar Axiom if there is any concern over RAID 5 performance. Both

schemes should be tested to determine which best meets the needs of the solution.
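For reference, the write penalty in question is usually modeled as two back-end disk operations per host write for RAID 10 and four for RAID 5. The Python below sketches that textbook calculation only; it deliberately ignores write caching and striping across RAID sets, which is exactly what the paper argues changes the picture, and the 50% write fraction is an assumption rather than a measured Exchange figure.

```python
# Back-end disk operations per host write in the conventional model.
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4}


def backend_iops(host_iops: float, write_fraction: float, raid_level: str) -> float:
    """Disk-level IOPS implied by a host workload, with no cache modeled."""
    if not 0.0 <= write_fraction <= 1.0:
        raise ValueError("write_fraction must be between 0 and 1")
    reads = host_iops * (1 - write_fraction)
    writes = host_iops * write_fraction
    return reads + writes * WRITE_PENALTY[raid_level]


# 960 host IOPS (the earlier sizing example) with an assumed 50% writes.
for level in ("RAID 10", "RAID 5"):
    print(level, backend_iops(960, 0.5, level))
```

The gap between the two results is the cost that testing both schemes on the array is meant to confirm or rule out.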

Impact of Array-based Cache

Cache in an integrated storage array is always vital for random-write I/O. The ability

to acknowledge a write request while the data remains in mirrored cache will greatly

reduce write service times. The Pillar Axiom's extremely large slammer cache

capability delivers excellent write performance.

Read cache in an Exchange environment, however, has a lesser impact given the

random nature of the workload. The ability to use pre-fetch, read-ahead, or reuse of data yields read

cache hits that are typically below 5%.

Failure Mode

While a solution will run smoothly most of the time, it is important to plan for what

will occur in the event of system failure.

Unlike most storage systems in which two controllers do everything, the Pillar Axiom

distributes the heaviest workload across a shared disk pool, leaving the slammers

to manage data flow and advanced features. The advantage of Pillar's approach

is most evident in the event of a failure. Should a disk fail, the rebuild activity is

isolated within the brick, which means only a fraction of the disks and RAID engines

are impacted to complete the drive rebuild. As a result, rebuilds are completed very

quickly with minimal impact to Exchange performance. Further, a SATA drive rebuild

does not impact the applications accessing FC disks.

In a storage system where all activity is managed by just two controllers in the

head unit, at least 50% of processing power is diverted for a disk rebuild, impacting

every LUN on the controller. With the Pillar Axiom, each RAID controller in the brick

is rated to handle all of the drives within that brick. If a RAID controller fails, the other

controller within that brick comfortably handles the I/Os for those drives. If a CU

fails, the remaining CU picks up the load and no RAID processing power is lost.

This makes Pillar Axiom the most resilient system on the market and ideal for critical

applications like Exchange.

Summary

The combination of Microsoft Exchange 2007 and the Pillar Axiom is a class-leading

solution for messaging, collaboration, and unified communications. The Pillar Axiom

not only provides the stable, high-performance storage platform that Exchange

requires but, through its Application-Aware architecture and QoS, also enables other

Microsoft applications to be co-hosted on the same array in perfect harmony.

Pillar Data Systems takes a sensible, customer-centric approach to networked storage. We

started with a simple, yet powerful idea: Build a successful storage company by creating value

that others had promised, but never produced. At Pillar, we're on a mission to deliver the most

cost-effective, highly available networked storage solutions on the market. We build reliable,

flexible solutions that, for the first time, seamlessly unite SAN with NAS and enable multiple

tiers of storage on a single platform. In the end, we created an entirely new class of storage.

www.pillardata.com

© 2008 Pillar Data Systems. All Rights Reserved. Pillar Data Systems, Pillar Axiom, AxiomONE and the Pillar logo are

trademarks or registered trademarks of Pillar Data Systems. Other company and product names may be trademarks

of their respective owners. Specifications are subject to change without notice.

WP-EXCHANGE-0908
