09 Alphabeta

4/13/2012

CSE 473: Artificial Intelligence

Spring 2012

Adversarial Search: α-β Pruning

Dan Weld

Based on slides from Dan Klein, Stuart Russell, Andrew Moore and Luke Zettlemoyer

Space of Search Strategies

Blind Search
  DFS, BFS, IDS

Informed Search
  Systematic: Uniform cost, greedy, A*, IDA*
  Stochastic: Hill climbing w/ random walk & restarts

Constraint Satisfaction
  Backtracking=DFS, FC, k-consistency, exploiting structure

Adversary Search
  Mini-max
  Alpha-beta
  Evaluation functions
  Expecti-max

Logistics

Programming 2 out today

Due Wed 4/25

Types of Games

stratego

Number of Players? 1, 2, ?

Tic-tac-toe Game Tree

Mini-Max

Assumptions

High scoring leaf == good for you (bad for opp)

Opponent is super-smart, rational; never errs

Will play optimally against you

Idea

Exhaustive search

Alternate: best move for you; best for opponent

Max

Min

Guarantee

Will find best move for you (given assumptions)
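The exhaustive alternation described above can be sketched in a few lines of Python. This is a minimal illustration, not the course's reference code: the nested-list tree encoding, the function name `minimax`, and the leaf values in `example_tree` are all assumptions made for the example.

```python
def minimax(node, maximizing=True):
    """Return the minimax value of a game tree given as nested lists.

    Assumption: a leaf is a plain number (utility from MAX's point of view)
    and an internal node is a list of child nodes.
    """
    if not isinstance(node, list):
        return node  # leaf: high score is good for MAX, bad for MIN
    # Players alternate, so the children are evaluated for the other player.
    child_values = [minimax(child, not maximizing) for child in node]
    return max(child_values) if maximizing else min(child_values)


# Hypothetical two-ply tree: MAX moves at the root, MIN replies below.
example_tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print(minimax(example_tree))  # MIN nodes back up 3, 2, 2; MAX picks 3
```

The guarantee only holds under the stated assumptions: the search is exhaustive and the opponent really does pick the minimizing child at every MIN node.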


Minimax Example

(Figure sequence: example game tree with alternating max and min levels)

Minimax Search


Minimax Properties

Optimal?
  Yes, against perfect player. Otherwise?

Time complexity?
  O(b^m)

Space complexity?
  O(bm)

For chess, b ≈ 35, m ≈ 100
  Exact solution is completely infeasible
  But, do we need to explore the whole tree?

Do We Need to Evaluate Every Node?

(Figure: max/min tree with leaf values 10, 10, 100)

α-β Pruning Example

What do we know about this node?

(Figure sequence: progress of search on the example tree; values shown include 3, 14, 5)

α-β Pruning General Case

Add α, β bounds to each node

α is the best value that MAX can get at any choice point along the current path

If value of n becomes worse than α, MAX will avoid it, so can stop considering n's other children

Define β similarly for MIN

(Figure: path from the root alternating Player / Opponent / Player / Opponent down to node n)

Alpha-Beta Pruning Example

(Figure sequence: example tree annotated with the α and β values at each node as the search proceeds, starting from α=-∞, β=+∞ at the root)

Alpha-Beta Pseudocode

inputs: state, current game state
        α, value of best alternative for MAX on path to state
        β, value of best alternative for MIN on path to state
returns: a utility value

function MAX-VALUE(state, α, β)
  if TERMINAL-TEST(state) then
    return UTILITY(state)
  v ← -∞
  for a, s in SUCCESSORS(state) do
    v ← MAX(v, MIN-VALUE(s, α, β))
    if v ≥ β then return v
    α ← MAX(α, v)
  return v

function MIN-VALUE(state, α, β)
  if TERMINAL-TEST(state) then
    return UTILITY(state)
  v ← +∞
  for a, s in SUCCESSORS(state) do
    v ← MIN(v, MAX-VALUE(s, α, β))
    if v ≤ α then return v
    β ← MIN(β, v)
  return v

At max node: Prune if v ≥ β; Update α

At min node: Prune if v ≤ α; Update β

α is MAX's best alternative here or above

β is MIN's best alternative here or above
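The MAX-VALUE / MIN-VALUE pair can be folded into one runnable Python function. The sketch below is an illustration under assumptions: the nested-list tree encoding, the `visited` list used to record which leaves were actually evaluated, and the leaf values are hypothetical and not taken from the slides.

```python
import math


def alphabeta(node, alpha=-math.inf, beta=math.inf, maximizing=True, visited=None):
    """Minimax value with alpha-beta pruning over a nested-list tree.

    alpha: best value MAX can already guarantee on the path to this node.
    beta:  best value MIN can already guarantee on the path to this node.
    visited: optional list that collects the leaves actually evaluated.
    """
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        v = -math.inf
        for child in node:
            v = max(v, alphabeta(child, alpha, beta, False, visited))
            if v >= beta:      # MIN above will never allow this: prune
                return v
            alpha = max(alpha, v)
        return v
    v = math.inf
    for child in node:
        v = min(v, alphabeta(child, alpha, beta, True, visited))
        if v <= alpha:         # MAX above already has something better: prune
            return v
        beta = min(beta, v)
    return v


# Hypothetical tree: MAX at the root, three MIN nodes, nine leaves.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
seen = []
print(alphabeta(tree, visited=seen))  # 3
print(seen)                           # [3, 12, 8, 2, 14, 5, 2]
```

In this run the leaves 4 and 6 are never evaluated: once the second MIN node sees 2, its value cannot exceed 2, which is already worse for MAX than the 3 found in the first subtree.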


Alpha-Beta Pruning Properties

This pruning has no effect on final result at the root

Values of intermediate nodes might be wrong!
  but, they are bounds

Good child ordering improves effectiveness of pruning

With perfect ordering:
  Time complexity drops to O(b^(m/2))
  Doubles solvable depth!
  Full search of, e.g. chess, is still hopeless

Resource Limits

Cannot search to leaves

Depth-limited search
  Instead, search a limited depth of tree
  Replace terminal utilities with heuristic eval function for non-terminal positions

Guarantee of optimal play is gone

Example:
  Suppose we have 100 seconds, can explore 10K nodes / sec
  So can check 1M nodes per move
  reaches about depth 8: decent chess program

(Figure: depth-limited max/min tree with values -2, -1, 4, -2, 9 and unexplored nodes marked "?")
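The node-budget arithmetic in the example can be checked with a short calculation. This is a rough sketch: it assumes the chess branching factor b ≈ 35 quoted earlier in the deck, treats the node count at depth d as b^d for plain minimax, and uses the perfect-ordering bound b^(d/2) for pruning.

```python
import math

time_budget_s = 100        # seconds per move (from the example)
nodes_per_s = 10_000       # nodes explored per second (from the example)
budget = time_budget_s * nodes_per_s
print(budget)              # 1000000

b = 35                     # assumed branching factor for chess

# Plain minimax: b**d = budget  =>  d = log_b(budget)
depth_minimax = math.log(budget, b)

# Perfect ordering: b**(d/2) = budget  =>  d = 2 * log_b(budget)
depth_alphabeta = 2 * depth_minimax

print(round(depth_minimax, 1), round(depth_alphabeta, 1))
```

Under these assumptions plain minimax gets to a little under depth 4, and well-ordered pruning roughly doubles that, which is where the figure of about depth 8 comes from.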

Heuristic Evaluation Function

Function which scores non-terminals

Ideal function: returns the utility of the position

In practice: typically weighted linear sum of features:
  e.g. f1(s) = (num white queens - num black queens), etc.

Evaluation for Pacman

What features would be good for Pacman?

Which algorithm?
  α-β, depth 4, simple eval fun

Which algorithm?
  α-β, depth 4, better eval fun
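A weighted linear sum of features, as described under Heuristic Evaluation Function, might look like the following. Everything concrete here is an assumption for illustration: the dictionary-based state, the two feature functions, and the weights 9.0 and 1.0 are hypothetical and do not come from the slides.

```python
def linear_eval(state, features, weights):
    """Score a non-terminal state as sum_i w_i * f_i(state)."""
    return sum(w * f(state) for f, w in zip(features, weights))


# Hypothetical chess-like state described only by piece counts.
state = {"white_queens": 1, "black_queens": 0,
         "white_pawns": 6, "black_pawns": 7}


def queen_diff(s):
    # f1(s) = num white queens - num black queens
    return s["white_queens"] - s["black_queens"]


def pawn_diff(s):
    # A second, made-up feature in the same style.
    return s["white_pawns"] - s["black_pawns"]


features = [queen_diff, pawn_diff]
weights = [9.0, 1.0]   # illustrative weights only

print(linear_eval(state, features, weights))  # 9.0 * 1 + 1.0 * (-1) = 8.0
```

In a depth-limited search this function would be called wherever the search is cut off, in place of the terminal utility.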


Why Pacman Starves

He knows his score will go up by eating the dot now
He knows his score will go up just as much by eating the dot later on
There are no point-scoring opportunities after eating the dot
Therefore, waiting seems just as good as eating

Stochastic Single-Player

What if we don't know what the result of an action will be? E.g.,
  In solitaire, shuffle is unknown
  In minesweeper, mine locations

Can do expectimax search
  Chance nodes, like actions except the environment controls the action chosen
  Max nodes as before
  Chance nodes take average (expectation) of value of children

(Figure: max node over average (chance) nodes)

Which Algorithms?

Expectimax

Minimax

3 ply look ahead, ghosts move randomly

Maximum Expected Utility

Why should we average utilities? Why not minimax?

Principle of maximum expected utility: an agent should choose the action which maximizes its expected utility, given its knowledge

General principle for decision making
Often taken as the definition of rationality
We'll see this idea over and over in this course!

Let's decompress this definition

Reminder: Probabilities

A random variable represents an event whose outcome is unknown
A probability distribution is an assignment of weights to outcomes

Example: traffic on freeway?
  Random variable: T = whether there's traffic
  Outcomes: T in {none, light, heavy}
  Distribution: P(T=none) = 0.25, P(T=light) = 0.55, P(T=heavy) = 0.20

Some laws of probability (more later):
  Probabilities are always non-negative
  Probabilities over all possible outcomes sum to one

As we get more evidence, probabilities may change:
  P(T=heavy) = 0.20, P(T=heavy | Hour=8am) = 0.60
  We'll talk about methods for reasoning and updating probabilities later

What are Probabilities?

Objectivist / frequentist answer:
  Averages over repeated experiments
  E.g. empirically estimating P(rain) from historical observation
  E.g. pacman's estimate of what the ghost will do, given what it has done in the past
  Assertion about how future experiments will go (in the limit)
  Makes one think of inherently random events, like rolling dice

Subjectivist / Bayesian answer:
  Degrees of belief about unobserved variables
  E.g. an agent's belief that it's raining, given the temperature
  E.g. pacman's belief that the ghost will turn left, given the state
  Often learn probabilities from past experiences (more later)
  New evidence updates beliefs (more later)


Uncertainty Everywhere

Not just for games of chance!
  I'm sick: will I sneeze this minute?
  Email contains "FREE!": is it spam?
  Tooth hurts: have cavity?
  60 min enough to get to the airport?
  Robot rotated wheel three times, how far did it advance?
  Safe to cross street? (Look both ways!)

Sources of uncertainty in random variables:
  Inherently random process (dice, etc)
  Insufficient or weak evidence
  Ignorance of underlying processes
  Unmodeled variables
  The world's just noisy: it doesn't behave according to plan!

Utilities

Utilities are functions from outcomes (states of the world) to real numbers that describe an agent's preferences

Where do utilities come from?
  In a game, may be simple (+1/-1)
  Utilities summarize the agent's goals
  Theorem: any set of preferences between outcomes can be summarized as a utility function (provided the preferences meet certain conditions)

In general, we hard-wire utilities and let actions emerge (why don't we let agents decide their own utilities?)

More on utilities soon

Reminder: Expectations

We can define function f(X) of a random variable X

The expected value of a function is its average value, weighted by the probability distribution over inputs

Example: How long to get to the airport?
  Length of driving time as a function of traffic:
  L(none) = 20, L(light) = 30, L(heavy) = 60

What is my expected driving time?
  Notation: E_{P(T)}[ L(T) ]
  For this example, take P(T) = {none: 0.25, light: 0.5, heavy: 0.25}
  E[ L(T) ] = L(none) * P(none) + L(light) * P(light) + L(heavy) * P(heavy)
  E[ L(T) ] = (20 * 0.25) + (30 * 0.5) + (60 * 0.25) = 35
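The expected driving time from the Reminder: Expectations slide can be reproduced with a short helper. This is a sketch only: the function name `expectation` and the dictionary encoding of the distribution are assumptions, while the numbers are the ones used in the driving-time example.

```python
def expectation(values, probs):
    """Expected value of `values` under the distribution `probs`.

    Both arguments are dicts keyed by outcome; probs must sum to one.
    """
    if abs(sum(probs.values()) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to one")
    return sum(probs[outcome] * values[outcome] for outcome in probs)


P = {"none": 0.25, "light": 0.5, "heavy": 0.25}   # distribution over traffic T
L = {"none": 20, "light": 30, "heavy": 60}        # driving time L(T)

print(expectation(L, P))  # 5.0 + 15.0 + 15.0 = 35.0
```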

Expectimax Search Trees

What if we don't know what the result of an action will be? E.g.,
  In solitaire, next card is unknown
  In minesweeper, mine locations
  In pacman, the ghosts act randomly

Can do expectimax search
  Chance nodes, like min nodes, except the outcome is uncertain
  Calculate expected utilities
  Max nodes as in minimax search
  Chance nodes take average (expectation) of value of children

(Figure: max node over chance nodes)

Later, we'll learn how to formalize the underlying problem as a Markov Decision Process


Which Algorithm?

Minimax: no point in trying

3 ply look ahead, ghosts move randomly

Which Algorithm?

Expectimax: wins some of the time

3 ply look ahead, ghosts move randomly

Expectimax Search

In expectimax search, we have a probabilistic model of how the opponent (or environment) will behave in any state
  Model could be a simple uniform distribution (roll a die)
  Model could be sophisticated and require a great deal of computation
  We have a node for every outcome out of our control: opponent or environment
  The model might say that adversarial actions are likely!

For now, assume for any state we magically have a distribution to assign probabilities to opponent actions / environment outcomes

Expectimax Pseudocode

def value(s)
  if s is a max node return maxValue(s)
  if s is an exp node return expValue(s)
  if s is a terminal node return evaluation(s)

def maxValue(s)
  values = [value(s') for s' in successors(s)]
  return max(values)

def expValue(s)
  values = [value(s') for s' in successors(s)]
  weights = [probability(s, s') for s' in successors(s)]
  return expectation(values, weights)
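A runnable version of the pseudocode, under an explicit tree encoding, could look like this. The tuple encoding (`"leaf"`, `"max"`, `"exp"`), the probabilities attached to chance-node children, and the example values are assumptions made for the sketch; the slides leave the state representation and the probability model open.

```python
def expectimax(node):
    """Value of a node in a tree of max nodes, chance nodes, and leaves.

    Assumed encoding:
      ("leaf", value)
      ("max",  [child, ...])
      ("exp",  [(probability, child), ...])   # probabilities sum to one
    """
    kind, payload = node
    if kind == "leaf":
        return payload
    if kind == "max":
        return max(expectimax(child) for child in payload)
    if kind == "exp":
        # Chance node: probability-weighted average of the children.
        return sum(p * expectimax(child) for p, child in payload)
    raise ValueError(f"unknown node kind: {kind!r}")


# Hypothetical example: MAX chooses between two uncertain actions.
tree = ("max", [
    ("exp", [(0.5, ("leaf", 8)), (0.5, ("leaf", 24))]),    # expected 16.0
    ("exp", [(0.25, ("leaf", 0)), (0.75, ("leaf", 20))]),  # expected 15.0
])

print(expectimax(tree))  # 16.0
```

Here MAX prefers the first action even though its worst case (8) is better only by luck of the model; a minimax player would compare 8 against 0 instead of 16 against 15.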

Expectimax for Pacman

Notice that weve gotten away from thinking that the

ghosts are trying to minimize pacmans score

Instead, they are now a part of the environment

Pacman has a belief (distribution) over how they will

act

Quiz: Can we see minimax as a special case of

expectimax?

Quiz: what would pacmans computation look like if

we assumed that the ghosts were doing 1-ply

minimax and taking the result 80% of the time,

otherwise moving randomly?

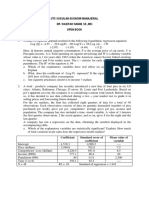

Expectimax for Pacman

Results from playing 5 games

                     Minimizing Ghost             Random Ghost
Minimax Pacman       Won 5/5, Avg. Score: 493     Won 5/5, Avg. Score: 483
Expectimax Pacman    Won 1/5, Avg. Score: -303    Won 5/5, Avg. Score: 503

Pacman does depth 4 search with an eval function that avoids trouble

Minimizing ghost does depth 2 search with an eval function that seeks Pacman


Expectimax Pruning?

Not easy
  exact: need bounds on possible values
  approximate: sample high-probability branches

Expectimax Evaluation

Evaluation functions quickly return an estimate for a node's true value (which value, expectimax or minimax?)

For minimax, evaluation function scale doesn't matter
  We just want better states to have higher evaluations (get the ordering right)
  We call this insensitivity to monotonic transformations

For expectimax, we need magnitudes to be meaningful
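The point about monotonic transformations can be demonstrated numerically. The sketch below is an illustration with assumed data: two actions, each leading to two equally likely leaves, with hypothetical leaf values 0, 40 and 20, 30, compared before and after squaring (which is monotonic on non-negative numbers).

```python
def minimax_choice(groups):
    """Index of the action MAX picks when a minimizer chooses the leaf."""
    return max(range(len(groups)), key=lambda i: min(groups[i]))


def expectimax_choice(groups):
    """Index of the action MAX picks when leaves are equally likely."""
    return max(range(len(groups)), key=lambda i: sum(groups[i]) / len(groups[i]))


leaves = [[0, 40], [20, 30]]                       # hypothetical evaluations
squared = [[x * x for x in group] for group in leaves]

# Minimax only cares about ordering, so the choice is unchanged.
print(minimax_choice(leaves), minimax_choice(squared))        # 1 1

# Expectimax averages magnitudes: 20 vs 25 becomes 800 vs 650.
print(expectimax_choice(leaves), expectimax_choice(squared))  # 1 0
```

The ordering of the leaves is identical in both trees, yet the expectimax decision flips, which is why the evaluation function's scale has to be meaningful for expectimax.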

(Figure for Expectimax Evaluation: values 40, 20, 30 become 1600, 400, 900 under x^2)

Mixed Layer Types

Stochastic Two-Player

E.g. Backgammon

Expectiminimax
  Environment is an extra player that moves after each agent
  Chance nodes take expectations, otherwise like minimax

Dice rolls increase b: 21 possible rolls with 2 dice
  Backgammon ≈ 20 legal moves
  Depth 4 = 20 x (21 x 20)^3 ≈ 1.5 x 10^9

As depth increases, probability of reaching a given node shrinks
  So value of lookahead is diminished
  So limiting depth is less damaging
  But pruning is less possible

TDGammon uses depth-2 search + very good eval function + reinforcement learning: world-champion level play
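Expectiminimax adds a chance layer to minimax, and a small sketch makes the three node types concrete. The tuple encoding, the node names, and the example tree are assumptions for illustration only; the last line simply evaluates the slide's node-count expression 20 x (21 x 20)^3 directly.

```python
def expectiminimax(node):
    """Value of a tree mixing max, min, and chance layers.

    Assumed encoding:
      ("leaf", value)
      ("max", [child, ...]) / ("min", [child, ...])
      ("chance", [(probability, child), ...])
    """
    kind, payload = node
    if kind == "leaf":
        return payload
    if kind == "max":
        return max(expectiminimax(child) for child in payload)
    if kind == "min":
        return min(expectiminimax(child) for child in payload)
    if kind == "chance":
        return sum(p * expectiminimax(child) for p, child in payload)
    raise ValueError(f"unknown node kind: {kind!r}")


# Hypothetical tree: MAX moves, a fair coin is flipped, then MIN replies.
tree = ("max", [
    ("chance", [(0.5, ("min", [("leaf", 4), ("leaf", 6)])),
                (0.5, ("min", [("leaf", 2), ("leaf", 10)]))]),   # 0.5*4 + 0.5*2 = 3.0
    ("chance", [(0.5, ("min", [("leaf", 1), ("leaf", 9)])),
                (0.5, ("min", [("leaf", 8), ("leaf", 12)]))]),   # 0.5*1 + 0.5*8 = 4.5
])
print(expectiminimax(tree))   # 4.5

# Node count for the backgammon estimate: 20 moves, then 3 x (21 rolls x 20 moves).
print(20 * (21 * 20) ** 3)    # 1481760000
```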

Multi-player Non-Zero-Sum Games

Similar to minimax:
  Utilities are now tuples
  Each player maximizes their own entry at each node
  Propagate (or back up) nodes from children

Can give rise to cooperation and competition dynamically

(Figure: three-player game tree with leaf utility tuples 1,2,6  4,3,2  6,1,2  7,4,1  5,1,1  1,5,2  7,7,1  5,4,5)
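The backing-up rule for utility tuples can be sketched as follows. The leaf tuples are the ones listed above, but the tree shape is an assumption: a complete binary tree in which players 1, 2, and 3 move in turn, which may not match the original figure. The function name `maxn` and the nested-list encoding are also hypothetical.

```python
def maxn(node, player=0, n_players=3):
    """Back up utility tuples: each player maximizes their own entry.

    Assumed encoding: a leaf is a tuple of utilities (one per player),
    an internal node is a list of children; players move in rotation.
    """
    if isinstance(node, tuple):
        return node
    next_player = (player + 1) % n_players
    backed_up = [maxn(child, next_player, n_players) for child in node]
    # Ties are broken in favor of the first child (behavior of max()).
    return max(backed_up, key=lambda utilities: utilities[player])


# Assumed shape: player 1 at the root, player 2 below, player 3 above the leaves.
tree = [
    [[(1, 2, 6), (4, 3, 2)], [(6, 1, 2), (7, 4, 1)]],
    [[(5, 1, 1), (1, 5, 2)], [(7, 7, 1), (5, 4, 5)]],
]
print(maxn(tree))  # (1, 2, 6)
```

At the root, player 1 is indifferent between (1, 2, 6) and (1, 5, 2), since both give them 1; the sketch keeps the first, so a different tie-breaking rule would change which tuple is reported.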
