
66 job interview questions for data scientists

Posted by Vincent Granville on February 13, 2013 at 8:00pm


We are now at 86 questions. These are mostly open-ended questions, to assess the

technical horizontal knowledge of a senior candidate for a rather high level position,

e.g. director.

1. What is the biggest data set that you processed, and how did you process it,

what were the results?

2. Tell me two success stories about your analytic or computer science projects?

How was lift (or success) measured?

3. What is: lift, KPI, robustness, model fitting, design of experiments, 80/20 rule?

4. What is: collaborative filtering, n-grams, map reduce, cosine distance?

5. How to optimize a web crawler to run much faster, extract better information,

and better summarize data to produce cleaner databases?

6. How would you come up with a solution to identify plagiarism?

7. How to detect individual paid accounts shared by multiple users?

8. Should click data be handled in real time? Why? In which contexts?

9. What is better: good data or good models? And how do you define "good"? Is

there a universal good model? Are there any models that are definitely not so

good?

10. What is probabilistic merging (AKA fuzzy merging)? Is it easier to handle with

SQL or other languages? Which languages would you choose for semi-

structured text data reconciliation?

11. How do you handle missing data? What imputation techniques do you

recommend?

12. What is your favorite programming language / vendor? Why?

13. Tell me 3 things positive and 3 things negative about your favorite statistical

software.

14. Compare SAS, R, Python, Perl

15. What is the curse of big data?

16. Have you been involved in database design and data modeling?

17. Have you been involved in dashboard creation and metric selection? What do

you think about Birt?

18. What features of Teradata do you like?

19. You are about to send one million emails (marketing campaign). How do you optimize delivery? How do you optimize response? Can you optimize both separately? (answer: not really)

20. Toad, Brio, and similar clients are quite inefficient at querying Oracle databases. Why? What would you do to increase speed by a factor of 10 and to handle far bigger outputs?

21. How would you turn unstructured data into structured data? Is it really

necessary? Is it OK to store data as flat text files rather than in an SQL-

powered RDBMS?

22. What are hash table collisions? How are they avoided? How frequently do they happen?

23. How to make sure a mapreduce application has good load balance? What is

load balance?

24. Examples where mapreduce does not work? Examples where it works very well? What are the security issues involved with the cloud? What do you think of EMC's solution offering a hybrid approach - both internal and external cloud - to mitigate the risks and offer other advantages (which ones)?

25. Is it better to have 100 small hash tables or one big hash table, in memory, in

terms of access speed (assuming both fit within RAM)? What do you think

about in-database analytics?

26. Why is naive Bayes so bad? How would you improve a spam detection

algorithm that uses naive Bayes?

27. Have you been working with white lists? Positive rules? (In the context of fraud

or spam detection)

28. What is star schema? Lookup tables?

29. Can you perform logistic regression with Excel? (yes) How? (use linest on log-

transformed data)? Would the result be good? (Excel has numerical issues, but

it's very interactive)

30. Have you optimized code or algorithms for speed: in SQL, Perl, C++, Python

etc. How, and by how much?

31. Is it better to spend 5 days developing a 90% accurate solution, or 10 days for

100% accuracy? Depends on the context?

32. Define: quality assurance, six sigma, design of experiments. Give examples of

good and bad designs of experiments.

33. What are the drawbacks of general linear model? Are you familiar with

alternatives (Lasso, ridge regression, boosted trees)?

34. Do you think 50 small decision trees are better than a large one? Why?

35. Is actuarial science not a branch of statistics (survival analysis)? If not, how so?

36. Give examples of data that does not have a Gaussian distribution, nor log-

normal. Give examples of data that has a very chaotic distribution?

37. Why is mean square error a bad measure of model performance? What would

you suggest instead?

38. How can you prove that one improvement you've brought to an algorithm is

really an improvement over not doing anything? Are you familiar with A/B

testing?

39. What is sensitivity analysis? Is it better to have low sensitivity (that is, great

robustness) and low predictive power, or the other way around? How to perform

good cross-validation? What do you think about the idea of injecting noise in

your data set to test the sensitivity of your models?

40. Compare logistic regression w. decision trees, neural networks. How have

these technologies been vastly improved over the last 15 years?

41. Do you know of, or have you used, data reduction techniques other than PCA? What do you think of step-wise regression? What kind of step-wise techniques are you familiar with? When is full data better than reduced data or a sample?

42. How would you build non parametric confidence intervals, e.g. for scores? (see

the AnalyticBridge theorem)

43. Are you familiar either with extreme value theory, monte carlo simulations or

mathematical statistics (or anything else) to correctly estimate the chance of a

very rare event?

44. What is root cause analysis? How to identify a cause vs. a correlation? Give

examples.

45. How would you define and measure the predictive power of a metric?

46. How to detect the best rule set for a fraud detection scoring technology? How

do you deal with rule redundancy, rule discovery, and the combinatorial nature

of the problem (for finding optimum rule set - the one with best predictive

power)? Can an approximate solution to the rule set problem be OK? How

would you find an OK approximate solution? How would you decide it is good

enough and stop looking for a better one?

47. How to create a keyword taxonomy?

48. What is a Botnet? How can it be detected?

49. Any experience with using API's? Programming API's? Google or Amazon

API's? AaaS (Analytics as a service)?

50. When is it better to write your own code than using a data science software

package?

51. Which tools do you use for visualization? What do you think of Tableau? R? SAS? (for graphs). How to efficiently represent 5 dimensions in a chart (or in a video)?

52. What is POC (proof of concept)?

53. What types of clients have you been working with: internal, external, sales /

finance / marketing / IT people? Consulting experience? Dealing with vendors,

including vendor selection and testing?

54. Are you familiar with software life cycle? With IT project life cycle - from

gathering requests to maintenance?

55. What is a cron job?

56. Are you a lone coder? A production guy (developer)? Or a designer (architect)?

57. Is it better to have too many false positives, or too many false negatives?

58. Are you familiar with pricing optimization, price elasticity, inventory

management, competitive intelligence? Give examples.

59. How does Zillow's algorithm work? (to estimate the value of any home in US)

60. How to detect bogus reviews, or bogus Facebook accounts used for bad

purposes?

61. How would you create a new anonymous digital currency?

62. Have you ever thought about creating a startup? Around which idea / concept?

63. Do you think that typed login / password will disappear? How could they be

replaced?

64. Have you used time series models? Cross-correlations with time lags?

Correlograms? Spectral analysis? Signal processing and filtering techniques?

In which context?

65. Which data scientists do you admire most? Which startups?

66. How did you become interested in data science?

67. What is an efficiency curve? What are its drawbacks, and how can they be

overcome?

68. What is a recommendation engine? How does it work?

69. What is an exact test? How and when can simulations help us when we do not

use an exact test?

70. What do you think makes a good data scientist?

71. Do you think data science is an art or a science?

72. What is the computational complexity of a good, fast clustering algorithm? What

is a good clustering algorithm? How do you determine the number of clusters?

How would you perform clustering on one million unique keywords, assuming

you have 10 million data points - each one consisting of two keywords, and a

metric measuring how similar these two keywords are? How would you create

this 10 million data points table in the first place?

73. Give a few examples of "best practices" in data science.

74. What could make a chart misleading, difficult to read or interpret? What

features should a useful chart have?

75. Do you know a few "rules of thumb" used in statistical or computer science? Or

in business analytics?

76. What are your top 5 predictions for the next 20 years?

77. How do you immediately know when statistics published in an article (e.g.

newspaper) are either wrong or presented to support the author's point of view,

rather than correct, comprehensive factual information on a specific subject?

For instance, what do you think about the official monthly unemployment

statistics regularly discussed in the press? What could make them more

accurate?

78. Testing your analytic intuition: look at these three charts. Two of them exhibit

patterns. Which ones? Do you know that these charts are called scatter-plots?

Are there other ways to visually represent this type of data?

79. You design a robust non-parametric statistic (metric) to replace correlation or R

square, that (1) is independent of sample size, (2) always between -1 and +1,

and (3) based on rank statistics. How do you normalize for sample size? Write

an algorithm that computes all permutations of n elements. How do you sample

permutations (that is, generate tons of random permutations) when n is large, to

estimate the asymptotic distribution for your newly created metric? You may

use this asymptotic distribution for normalizing your metric. Do you think that an

exact theoretical distribution might exist, and therefore, we should find it, and

use it rather than wasting our time trying to estimate the asymptotic distribution

using simulations?

80. More difficult, technical question related to previous one. There is an obvious

one-to-one correspondence between permutations of n elements and integers

between 1 and n! Design an algorithm that encodes an integer less than n! as a

permutation of n elements. What would be the reverse algorithm, used to

decode a permutation and transform it back into a number? Hint: An

intermediate step is to use the factorial number system representation of an integer.

Feel free to check this reference online to answer the question. Even better,

feel free to browse the web to find the full answer to the question (this will test

the candidate's ability to quickly search online and find a solution to a problem

without spending hours reinventing the wheel).

81. How many "useful" votes will a Yelp review receive? My answer: Eliminate bogus

accounts (read this article), or competitor reviews (how to detect them: use

taxonomy to classify users, and location - two Italian restaurants in same Zip

code could badmouth each other and write great comments for themselves).

Detect fake likes: some companies (e.g. FanMeNow.com) will charge you to

produce fake accounts and fake likes. Eliminate prolific users who like

everything, those who hate everything. Have a blacklist of keywords to filter

fake reviews. See if IP address or IP block of reviewer is in a blacklist such as

"Stop Forum Spam". Create honeypot to catch fraudsters. Also watch out for

disgruntled employees badmouthing their former employer. Watch out for 2 or 3

similar comments posted the same day by 3 users regarding a company that

receives very few reviews. Is it a brand new company? Add more weight to

trusted users (create a category of trusted users). Flag all reviews that are

identical (or nearly identical) and come from same IP address or same user.

Create a metric to measure distance between two pieces of text

(reviews). Create a review or reviewer taxonomy. Use hidden decision trees to rate or

score review and reviewers.

82. What did you do today? Or what did you do this week / last week?

83. What/when is the latest data mining book / article you read? What/when is the

latest data mining conference / webinar / class / workshop / training you

attended? What/when is the most recent programming skill that you acquired?

84. What are your favorite data science websites? Who do you admire most in the

data science community, and why? Which company do you admire most?

85. What/when/where is the last data science blog post you wrote?

86. In your opinion, what is data science? Machine learning? Data mining?

87. Who are the best people you recruited and where are they today?

88. Can you estimate and forecast sales for any book, based on Amazon public

data? Hint: read this article.

89. What's wrong with this picture?

90. Should removing stop words be Step 1 rather than Step 3, in the search engine

algorithm described here? Answer: Have you thought about the fact that mine and

yours could also be stop words? So in a bad implementation, data mining would

become data mine after stemming, then data. In practice, you remove stop

words before stemming. So Step 3 should indeed become step 1.

91. Experimental design and a bit of computer science with Lego's
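Questions 79 and 80 above ask for a way to sample random permutations and for a mapping between integers and permutations via the factorial number system. The following is a minimal sketch of one possible answer, not the author's reference solution. It assumes 0-based indices (0 to n! - 1 rather than 1 to n!) and permutations of the integers 0 to n - 1; the function names are hypothetical.

```python
import random
from math import factorial


def index_to_permutation(index, n):
    """Decode an integer 0 <= index < n! into a permutation of range(n)."""
    if not 0 <= index < factorial(n):
        raise ValueError("index must satisfy 0 <= index < n!")
    # Digits of index in the factorial number system, least significant first.
    digits = []
    for radix in range(1, n + 1):
        index, digit = divmod(index, radix)
        digits.append(digit)
    digits.reverse()  # most significant digit first
    # Each digit selects one of the elements that has not been used yet.
    remaining = list(range(n))
    return [remaining.pop(d) for d in digits]


def permutation_to_index(perm):
    """Reverse algorithm: encode a permutation of range(n) as an integer."""
    n = len(perm)
    remaining = list(range(n))
    index = 0
    for position, value in enumerate(perm):
        digit = remaining.index(value)  # rank of value among unused elements
        remaining.pop(digit)
        index += digit * factorial(n - 1 - position)
    return index


def random_permutation(n, rng):
    """Fisher-Yates shuffle: every one of the n! orderings is equally likely."""
    perm = list(range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randint(0, i)  # randint is inclusive on both ends
        perm[i], perm[j] = perm[j], perm[i]
    return perm


rng = random.Random(0)
sample = [random_permutation(8, rng) for _ in range(5)]  # five random permutations
```

With this encoding the permutations come out in lexicographic order, so index 0 maps to the identity permutation. For large n, sampling with `random_permutation` avoids enumerating all n! permutations when estimating the distribution of a rank-based statistic.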

Related articles:

Fast clustering algorithms for massive datasets

The curse of big data

What Map Reduce can't do

53.5 billion clicks dataset available for benchmarking and testing

Eight worst predictive modeling techniques

Another example of misuse of statistical science

The curse of dimensionality (it got worse with big data)

Data Science eBook

Data Science Apprenticeship

Debunking lack of analytic talent

Causation vs. Correlation

AnalyticTalent.com

Data Science dictionary

How and why to build a data dictionary

Data Science tools

A new random number generator

Modern books on multiple programming languages

Assessing efficiency of approximate vs. exact algorithms (coming soon)

Statistical comic strip

Fake data science

Most popular blog posts

2) What is the difference between Data Mining and Machine Learning?

Machine learning is concerned with the study, design, and development of algorithms that give computers the ability to learn without being explicitly programmed. Data mining, by contrast, is the process of extracting knowledge or previously unknown, interesting patterns from unstructured data. Machine learning algorithms are used during this process.

3) What is Overfitting in Machine learning?

In machine learning, overfitting occurs when a statistical model describes random error or noise instead of the underlying relationship. It is normally observed when a model is excessively complex, that is, when it has too many parameters relative to the amount of training data. A model that has been overfit performs poorly on new data.

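4) Why does overfitting happen?

The possibility of overfitting exists because the criterion used for training the model is not the same as the criterion used to judge its efficacy: training optimizes the fit to the training data, whereas efficacy is judged on data the model has not seen.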

5) How can you avoid overfitting?

Overfitting becomes less likely when you use a lot of data; it tends to happen when you try to learn from a small dataset. If you only have a small dataset and are forced to build a model from it, you can use a technique known as cross-validation. In this method the dataset is split into two sections, a training dataset and a testing dataset. The data points in the training dataset are used to build the model, while the testing dataset is used only to test it.

In this technique, a model is usually given a dataset of known data on which training is run (the training dataset) and a dataset of unknown data against which the model is tested. The idea of cross-validation is to define a dataset to test the model during the training phase.
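As an illustration of the cross-validation idea described above, here is a small sketch in plain Python. Everything in it is an assumption made for the example: the toy data (a straight line plus a deterministic +1/-1 "noise" term), the 5-fold scheme, and the two hypothetical models, a least-squares line and a 1-nearest-neighbour predictor that simply memorises the training points.

```python
def k_fold_indices(n, k):
    """Yield (train, test) index lists; point i is held out in fold i % k."""
    for fold in range(k):
        test = [i for i in range(n) if i % k == fold]
        train = [i for i in range(n) if i % k != fold]
        yield train, test


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns a predictor."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def fit_nearest_neighbor(xs, ys):
    """1-nearest-neighbour: memorises the training set, noise included."""
    def predict(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return predict


def mse(model, xs, ys):
    """Mean squared error of a predictor on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def cross_validated_mse(fit, xs, ys, k=5):
    """Average held-out error over k folds."""
    fold_errors = []
    for train, test in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        fold_errors.append(mse(model, [xs[i] for i in test], [ys[i] for i in test]))
    return sum(fold_errors) / len(fold_errors)


# Toy data: y = 2x plus an alternating +1/-1 term standing in for noise.
xs = [float(i) for i in range(50)]
ys = [2.0 * x + (1.0 if i % 2 == 0 else -1.0) for i, x in enumerate(xs)]

for name, fit in [("line", fit_line), ("1-NN", fit_nearest_neighbor)]:
    train_error = mse(fit(xs, ys), xs, ys)
    cv_error = cross_validated_mse(fit, xs, ys)
    print(f"{name}: training MSE = {train_error:.2f}, 5-fold CV MSE = {cv_error:.2f}")
```

On this toy data the memorising model reaches zero training error yet does worse than the simple line on the held-out folds, which is the symptom of overfitting that cross-validation is meant to expose.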

6) What is inductive machine learning?

Inductive machine learning is the process of learning by example, where a system tries to induce a general rule from a set of observed instances.

7) What are the five popular algorithms of Machine Learning?

a) Decision Trees

b) Neural Networks (back propagation)

c) Probabilistic networks

d) Nearest Neighbor

e) Support vector machines

8) What are the different Algorithm techniques in Machine Learning?

The different types of techniques in Machine Learning are

a) Supervised Learning

b) Unsupervised Learning

c) Semi-supervised Learning

d) Reinforcement Learning

e) Transduction

f) Learning to Learn

9) What are the three stages to build the hypotheses or model in machine learning?

a) Model building

b) Model testing

c) Applying the model

10) What is the standard approach to supervised learning?

The standard approach to supervised learning is to split the set of examples into a training set and a test set.

11) What are the Training set and the Test set?

In various areas of information science, such as machine learning, the set of data used to discover a potentially predictive relationship is known as the training set. The training set is the set of examples given to the learner, while the test set is the set of examples held back from the learner and used to test the accuracy of the hypotheses it generates. The training set is distinct from the test set.
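The split described above can be sketched in a few lines. This is a hedged illustration only: the function name `train_test_split`, the 25% test fraction, the fixed seed, and the toy (feature, label) pairs are all assumptions made for the example.

```python
import random


def train_test_split(examples, test_fraction=0.25, seed=0):
    """Shuffle a copy of the examples and hold back a fraction for testing."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = max(1, round(len(shuffled) * test_fraction))
    # The first n_test shuffled examples are held back from the learner.
    return shuffled[n_test:], shuffled[:n_test]


examples = [(x, 2 * x + 1) for x in range(20)]  # toy (feature, label) pairs
train_set, test_set = train_test_split(examples)
print(len(train_set), "training examples,", len(test_set), "test examples")
```

Shuffling before splitting guards against any ordering in the original data leaking into the split; the two sets never share an example.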

12) List the various approaches to machine learning.

The different approaches in Machine Learning are

a) Concept Vs Classification Learning

b) Symbolic Vs Statistical Learning

c) Inductive Vs Analytical Learning

13) What is not Machine Learning?

a) Artificial Intelligence

b) Rule based inference

14) What is Unsupervised Learning used for?

a) Find clusters of the data

b) Find low-dimensional representations of the data

c) Find interesting directions in data

d) Interesting coordinates and correlations

e) Find novel observations/ database cleaning

15) What is Supervised Learning used for?

a) Classifications

b) Speech recognition

c) Regression

d) Predict time series

e) Annotate strings

16) What is algorithm independent machine learning?

Algorithm-independent machine learning refers to machine learning whose mathematical foundations are independent of any particular classifier or learning algorithm.

17) What is the difference between artificial intelligence and machine learning?

Machine learning is the design and development of algorithms whose behaviour is based on empirical data. Artificial intelligence includes machine learning but also covers other areas such as knowledge representation, natural language processing, planning, and robotics.

18) What is a classifier in machine learning?

A classifier in machine learning is a system that takes a vector of discrete or continuous feature values as input and outputs a single discrete value, the class.

19) What are the advantages of Naive Bayes?

A Naive Bayes classifier tends to converge more quickly than discriminative models such as logistic regression, so you need less training data. Its main disadvantage is that it can't learn interactions between features.

20) In what areas is Pattern Recognition used?

Pattern Recognition can be used in

a) Computer Vision

b) Speech Recognition

c) Data Mining

d) Statistics

e) Information Retrieval

f) Bio-Informatics

21) What is Genetic Programming?

Genetic programming is one of the two techniques used in machine learning. The model is based on testing candidate solutions and selecting the best choice among a set of results.

22) What is Inductive Logic Programming in Machine Learning?

Inductive Logic Programming (ILP) is a subfield of machine learning that uses logic programming to represent background knowledge and examples.

23) What is Model Selection in Machine Learning?

The process of selecting models among different mathematical models, which are used to describe

the same data set is known as Model Selection. Model selection is applied to the fields of statistics,

machine learning and data mining.

24) What are the two methods used for the calibration in Supervised Learning?

The two methods used for predicting good probabilities in Supervised Learning are

a) Platt Calibration

b) Isotonic Regression

These methods are designed for binary classification, and extending them to multiclass problems is not trivial.

25) Which method is frequently used to prevent overfitting?

When there is sufficient data, Isotonic Regression is used; with little data it is itself prone to overfitting, so Platt Calibration is the safer choice in that case.

26) What is the difference between heuristics for rule learning and heuristics for decision trees?

The difference is that heuristics for decision trees evaluate the average quality of a number of disjoint sets, while rule learners only evaluate the quality of the set of instances covered by the candidate rule.

27) What is a Perceptron in Machine Learning?

In Machine Learning, the Perceptron is an algorithm for supervised binary classification: it assigns an input to one of two classes based on a linear combination of its features.
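To make the answer above concrete, here is a minimal sketch of the classic two-class perceptron learning rule. The training data (logical AND), the learning rate, the epoch limit, and the function names are assumptions chosen for illustration.

```python
def predict(weights, bias, features):
    """Return class 1 if the weighted sum is positive, otherwise class 0."""
    activation = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=50, learning_rate=1.0):
    """Perceptron rule: nudge the weights toward each misclassified sample."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, target in zip(samples, labels):
            error = target - predict(weights, bias, features)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass with no errors: training has converged
            break
    return weights, bias


samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]  # logical AND, which is linearly separable
weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, s) for s in samples]
print(predictions)
```

The rule only converges when the two classes are linearly separable, which is why AND works here while a problem such as XOR would not.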

28) Explain the two components of a Bayesian logic program.

A Bayesian logic program consists of two components. The first component is a logical one; it consists of a set of Bayesian clauses, which capture the qualitative structure of the domain. The second component is a quantitative one; it encodes the quantitative information about the domain.

29) What are Bayesian Networks (BN)?

A Bayesian Network is a graphical model that represents the probabilistic relationships among a set of variables.

30) Why is an instance-based learning algorithm sometimes referred to as a lazy learning algorithm?

Instance-based learning algorithms are also referred to as lazy learning algorithms because they delay the induction or generalization process until classification is performed.
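The "lazy" behaviour described above can be seen in a k-nearest-neighbours classifier, a common instance-based learner. The sketch below is illustrative only; the class name, the choice of k = 3, squared Euclidean distance, and the toy points are assumptions.

```python
from collections import Counter


class LazyNearestNeighbors:
    """k-nearest-neighbours: stores instances, generalizes only when queried."""

    def __init__(self, k=3):
        self.k = k
        self.points = []
        self.labels = []

    def fit(self, points, labels):
        # "Training" only stores the instances; no model is induced here.
        self.points = list(points)
        self.labels = list(labels)
        return self

    def predict(self, query):
        # All the work is deferred to query time: rank the stored instances
        # by squared Euclidean distance and take a majority vote.
        by_distance = sorted(
            range(len(self.points)),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(self.points[i], query)),
        )
        votes = Counter(self.labels[i] for i in by_distance[: self.k])
        return votes.most_common(1)[0][0]


points = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
labels = ["a", "a", "a", "b", "b", "b"]
model = LazyNearestNeighbors(k=3).fit(points, labels)
print(model.predict((0.5, 0.5)), model.predict((5.5, 5.5)))
```

Because nothing is computed in `fit`, training is essentially free, but every prediction has to scan the stored instances.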

31) What are the two classification methods that SVM (Support Vector Machine) can handle?

a) Combining binary classifiers

b) Modifying the binary formulation to incorporate multiclass learning

32) What is ensemble learning?

To solve a particular computational problem, multiple models such as classifiers or experts are strategically generated and combined. This process is known as ensemble learning.

33) Why is ensemble learning used?

Ensemble learning is used to improve the classification, prediction, function approximation, etc. of a model.

34) When to use ensemble learning?

Ensemble learning is used when you can build component classifiers that are more accurate and independent of each other.
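The role of accuracy and independence in the answer above can be illustrated with a small calculation. The sketch assumes an idealized setting that real ensembles only approximate: an odd number of classifiers whose errors are fully independent, each correct with the same probability, combined by majority vote. The function name is hypothetical.

```python
from math import comb


def majority_vote_accuracy(n_classifiers, p_correct):
    """Probability that more than half of n independent classifiers are right."""
    if n_classifiers % 2 == 0:
        raise ValueError("use an odd number of classifiers to avoid ties")
    needed = n_classifiers // 2 + 1
    # Binomial tail: sum over all outcomes where a majority is correct.
    return sum(
        comb(n_classifiers, k) * p_correct**k * (1 - p_correct) ** (n_classifiers - k)
        for k in range(needed, n_classifiers + 1)
    )


for n in (1, 3, 25):
    print(n, "classifiers at 60% accuracy:", round(majority_vote_accuracy(n, 0.6), 3))
```

Under these assumptions the vote is more accurate than any single member whenever each member is better than chance, and less accurate when each member is worse than chance. Correlated errors weaken the effect, which is why independence matters.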

35) What are the two paradigms of ensemble methods?

The two paradigms of ensemble methods are

a) Sequential ensemble methods

b) Parallel ensemble methods

36) What is the general principle of an ensemble method, and what are bagging and boosting?

The general principle of an ensemble method is to combine the predictions of several models built with a given learning algorithm in order to improve robustness over a single model. Bagging is an ensemble method for improving unstable estimation or classification schemes. Boosting methods build models sequentially to reduce the bias of the combined model. Both boosting and bagging can reduce errors by reducing the variance term.

37) What is the bias-variance decomposition of classification error in ensemble methods?

The expected error of a learning algorithm can be decomposed into bias and variance. The bias term measures how closely the average classifier produced by the learning algorithm matches the target function. The variance term measures how much the learning algorithm's prediction fluctuates across different training sets.

38) What is an Incremental Learning algorithm in ensemble learning?

Incremental learning is the ability of an algorithm to learn from new data that becomes available after a classifier has already been generated from the existing dataset.

39) What is PCA, KPCA and ICA used for?

PCA (Principal Component Analysis), KPCA (Kernel-based Principal Component Analysis), and ICA (Independent Component Analysis) are important feature extraction techniques used for dimensionality reduction.

40) What is dimension reduction in Machine Learning?

In Machine Learning and statistics, dimension reduction is the process of reducing the number of random variables under consideration. It can be divided into feature selection and feature extraction.

41) What are support vector machines?

Support vector machines are supervised learning algorithms used for classification and regression

analysis.

42) What are the components of relational evaluation techniques?

The important components of relational evaluation techniques are

a) Data Acquisition

b) Ground Truth Acquisition

c) Cross Validation Technique

d) Query Type

e) Scoring Metric

f) Significance Test

43) What are the different methods for Sequential Supervised Learning?

The different methods to solve Sequential Supervised Learning problems are

a) Sliding-window methods

b) Recurrent sliding windows

c) Hidden Markov models

d) Maximum entropy Markov models

e) Conditional random fields

f) Graph transformer networks

44) In which areas of robotics and information processing do sequential prediction problems arise?

Sequential prediction problems arise in the following areas of robotics and information processing:

a) Imitation Learning

b) Structured prediction

c) Model based reinforcement learning

45) What is batch statistical learning?

Statistical learning techniques allow learning a function or predictor from a set of observed data that

can make predictions about unseen or future data. These techniques provide guarantees on the

performance of the learned predictor on the future unseen data based on a statistical assumption on

the data generating process.

46) What is PAC Learning?

PAC (Probably Approximately Correct) learning is a learning framework that has been introduced to

analyze learning algorithms and their statistical efficiency.

47) Into which categories can the sequence learning process be divided?

a) Sequence prediction

b) Sequence generation

c) Sequence recognition

d) Sequential decision

48) What is sequence learning?

Sequence learning is a method of teaching and learning in a logical manner.

49) What are two techniques of Machine Learning?

The two techniques of Machine Learning are

a) Genetic Programming

b) Inductive Learning

50) Give a popular application of machine learning that you see on a day-to-day basis.

The recommendation engines implemented by major e-commerce websites use machine learning.

También podría gustarte

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeCalificación: 4 de 5 estrellas4/5 (5813)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreCalificación: 4 de 5 estrellas4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe EverandNever Split the Difference: Negotiating As If Your Life Depended On ItCalificación: 4.5 de 5 estrellas4.5/5 (844)

- Grit: The Power of Passion and PerseveranceDe EverandGrit: The Power of Passion and PerseveranceCalificación: 4 de 5 estrellas4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceCalificación: 4 de 5 estrellas4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeDe EverandShoe Dog: A Memoir by the Creator of NikeCalificación: 4.5 de 5 estrellas4.5/5 (540)

- The Perks of Being a WallflowerDe EverandThe Perks of Being a WallflowerCalificación: 4.5 de 5 estrellas4.5/5 (2104)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersCalificación: 4.5 de 5 estrellas4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureCalificación: 4.5 de 5 estrellas4.5/5 (474)

- Her Body and Other Parties: StoriesDe EverandHer Body and Other Parties: StoriesCalificación: 4 de 5 estrellas4/5 (822)

- The Emperor of All Maladies: A Biography of CancerDe EverandThe Emperor of All Maladies: A Biography of CancerCalificación: 4.5 de 5 estrellas4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Calificación: 4.5 de 5 estrellas4.5/5 (122)

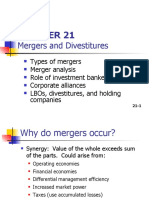
