Step by Rajiv Mural Failover Clustering 2012

Uploaded by RAJIV MURAL

This document guides you through configuring failover clustering step by step in Windows Server 2012. You can find many documents on the internet about this; I am posting it because I believe knowledge is for all.


[Step-by-Step] Creating a Windows Server 2012 R2 Failover Cluster using StarWind iSCSI SAN v8

March 27, 2014 at 10:27 pm | Posted in Cluster, Windows Server 2012, Windows Server 2012 R2

Tags: Cluster, Failover Cluster, iSCSI, SAN, StarWind iSCSI SAN, Step-by-Step, Windows Server 2012, Windows Server 2012 R2

If you don't know the StarWind iSCSI SAN product and you currently handle clusters that require shared storage (not necessarily Windows), I highly recommend taking a look at this platform. In short, StarWind iSCSI SAN is software that lets you create your own shared storage platform without requiring any additional hardware.

I wrote a post a while ago, Five Easy Steps to Configure Windows Server 2008 R2 Failover Cluster using StarWind iSCSI SAN, to explain how a Failover Cluster can be easily configured with the help of StarWind iSCSI SAN. Since there have been some changes in the latest releases of Windows Server, and StarWind iSCSI SAN has a brand new v8 of its platform, I thought it would be a good idea to write a new article showing an easy way to create our own cluster.

As in the previous post, the main idea of this article is to show a simple step-by-step process to get a Windows Server 2012 R2 Failover Cluster up and running without requiring an expensive shared storage platform. The steps involved are:

1. Review and complete pre-requisites for the environment.

2. Install StarWind iSCSI SAN software.

3. Configure and create LUNs using StarWind iSCSI SAN.

4. Install Failover Cluster feature and run cluster validation.

5. Create Windows Server 2012 R2 Failover Cluster.

1. Review and Complete Pre-Requisites for the Environment

Windows Server 2012 introduced some changes to the Failover Cluster scenarios. Even though those changes are important improvements, the basic rules of Failover Clustering have not changed.

Requirements for Windows Server 2012 R2 Failover Cluster

Here are the requirements in Windows Server 2012 R2 for Failover Clusters:

o Two or more compatible servers: The servers' hardware must be compatible with each other; it is highly recommended to always use the same type of hardware when creating a cluster. Microsoft requires the hardware involved to meet the qualification for the "Certified for Windows Server 2012" logo; this information can be retrieved from the Windows Server Catalog.

o Shared storage: This is where we can use the StarWind iSCSI SAN software.

o [Optional] Three network cards on each server: one for the public network (from which we usually access Active Directory), one private for the heartbeat between servers, and one dedicated to iSCSI storage communication. This is optional, since using a single network card is possible, but that is not suitable in almost any environment.

o All hosts must be members of an Active Directory domain. To install and configure a cluster we don't need a Domain Admin account, but we do need a domain account that is included in the local Administrators group of each host.

Here are some notes about the changes in requirements introduced since Windows Server 2012:

We can implement Failover Cluster on all Windows Server 2012 and Windows Server 2012 R2 editions, including of course Core installations. Previously, on Windows Server 2008 R2, the Enterprise or Datacenter edition was necessary.

Also, the concept of the Active Directory-detached cluster appears in Windows Server 2012 R2, which means that a Failover Cluster does not require a computer object in Active Directory; access is performed through a registration in DNS. But the cluster nodes must still be joined to AD.

Requirements for StarWind iSCSI SAN Software

Here are the requirements for installing the component that will be in charge of receiving the iSCSI connections:

o Windows Server 2008 R2 or Windows Server 2012

o Intel Xeon E5620 (or higher)

o 4 GB of RAM (or higher)

o 10 GB of disk space for StarWind application data and log files

o Storage available for iSCSI LUNs: SATA/SAS/SSD drive-based arrays are supported. Software-based arrays are not supported in iSCSI.

o 1 Gigabit Ethernet or 10 Gigabit Ethernet.

o Ports open between the hosts and the StarWind iSCSI SAN server: port 3260 for iSCSI and port 3261 for the management console.
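The port requirement can be checked from each cluster host before going any further. The following is a minimal Python sketch, not part of the original walkthrough: it simply attempts a TCP connection to each port. The function names are hypothetical, and the host name you pass in is whatever address your StarWind server uses on the iSCSI network.

```python
import socket

# Ports named in the requirement above.
ISCSI_PORT = 3260
MANAGEMENT_PORT = 3261


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


def check_starwind_ports(host: str) -> dict:
    """Check both ports on the given host and return {port: reachable}."""
    return {port: port_is_open(host, port)
            for port in (ISCSI_PORT, MANAGEMENT_PORT)}
```

Calling `check_starwind_ports("10.0.1.10")` (a placeholder address) from each node returns a dictionary keyed by port; a `False` value usually points to a firewall rule or to the StarWind service not running.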

General Recommendations for the Environment

In this scenario, there are several Microsoft and StarWind recommendations we should follow in order to get the best supportability and results. Keep in mind that each scenario could call for different recommendations.

To mention some of the general recommendations:

o NIC teaming for adapters, except iSCSI. Windows Server 2012 significantly improved the performance and supportability of network adapter teaming, and using it is highly recommended for better performance and high availability. But we must avoid configuring teaming on iSCSI network adapters.

Microsoft offers a very detailed document about handling NIC teaming in Windows Server 2012: Windows Server 2012 NIC Teaming (LBFO) Deployment and Management. Also check the article NIC Teaming Overview.

o Multipath for iSCSI network adapters. For iSCSI network adapters, MPIO is preferred over NIC teaming, because in most scenarios teaming does not improve adapter throughput and can even increase response times. The recommendation is to use MPIO with the round-robin policy.

o Isolate network traffic on the Failover Cluster. It is almost mandatory to separate iSCSI traffic from the rest of the networks, and highly recommended to isolate the other types of traffic as well. For example: Live Migration in Hyper-V clusters, management network, public network, or Hyper-V Replica traffic (if the feature is enabled in Windows Server 2012).

o Updated drivers and firmware. Most hardware vendors require all drivers and firmware components to be updated to the latest version before starting any configuration, such as a Failover Cluster. Keep in mind that having different drivers or firmware between hosts in a Failover Cluster will cause the validation tool to fail, and therefore the cluster won't be supported by Microsoft.

o Leave one extra LUN empty in the environment for future validations. The Failover Cluster Validation Tool is a great resource for retrieving a detailed status of the health of each cluster component; we can run the tool whenever we want and it will not cause any disruption. But a full storage validation requires at least one LUN that is available in the cluster but not used by any service or application.
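To make the MPIO recommendation in the list above more concrete, here is a toy Python model of what a round-robin policy does: requests rotate across all healthy paths, and a failed path is skipped. This is only an illustration of the idea, not how the Windows MPIO driver is implemented; the class name and the IP addresses used to label the paths are hypothetical.

```python
from itertools import cycle


class RoundRobinPaths:
    """Toy model of round-robin path selection over several iSCSI paths."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.failed = set()
        self._order = cycle(self.paths)

    def mark_failed(self, path):
        """Record that a path is down so it is skipped from now on."""
        self.failed.add(path)

    def next_path(self):
        """Return the next healthy path in rotation, skipping failed ones."""
        for _ in range(len(self.paths)):
            path = next(self._order)
            if path not in self.failed:
                return path
        raise RuntimeError("no healthy path to the target")
```

With two paths, consecutive calls to `next_path()` alternate between them; after `mark_failed()` on one path, every request goes through the survivor, which is the availability benefit that teaming would otherwise be used for.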

For more information about best practices, review the following link: StarWind High Availability Best Practices.

One important new feature introduced by StarWind iSCSI SAN v8 is the Log-Structured File System (LSFS). LSFS is a specialized file system that stores multiple files of virtual devices and ensures high performance during write operations with a random access pattern. It addresses the problem of slow disk operation by writing data at the speed the underlying storage can achieve during sequential writes.

At this moment LSFS is experimental in v8; use it carefully and validate your cluster services in a lab scenario if you are planning to deploy LSFS.
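The general idea behind a log-structured layout can be sketched in a few lines of Python. This is a conceptual toy, not StarWind's implementation (whose internals are not described here): every write, whatever block it targets, is appended to the end of a log, and an index remembers where the latest version of each block lives. All names are hypothetical.

```python
class LogStructuredStore:
    """Toy log-structured block store: every write is appended to a log."""

    def __init__(self):
        self.log = []    # append-only list of (block_number, data)
        self.index = {}  # block_number -> log position of the latest version

    def write(self, block_number: int, data: bytes) -> int:
        """Append the block to the end of the log and return its position."""
        position = len(self.log)
        self.log.append((block_number, data))
        self.index[block_number] = position
        return position

    def read(self, block_number: int) -> bytes:
        """Read the latest version of a block through the index."""
        return self.log[self.index[block_number]][1]

    def stale_entries(self) -> int:
        """Count overwritten log entries that a cleanup pass could reclaim."""
        return len(self.log) - len(self.index)
```

Writes to blocks 907, 3, 512 and 3 again land at log positions 0, 1, 2 and 3: the access pattern is random from the application's point of view but sequential on the underlying storage. The overwritten copy of block 3 stays in the log as a stale entry until some cleanup reclaims it.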

2. Install StarWind iSCSI SAN software

Once we have reviewed and verified the requirements, we can start installing the StarWind iSCSI SAN software, which can be downloaded in trial mode. This is the simplest step in our list, since the installation does not involve any complex steps.

During the process, the Microsoft iSCSI service and the driver for the software will need to be added to the server.

After the installation is complete we can open the console, where the first necessary step is to configure the storage pool.

We must select the path on the hard drive where we are going to store the LUNs to be used in our shared storage scenario.

3. Configure and create LUNs in StarWind iSCSI SAN

Once the program is installed, we can start managing it from the console, and we will see that the options are quite intuitive.

We are going to split the configuration section into two parts: hosting iSCSI LUNs with StarWind iSCSI SAN, and configuring the iSCSI initiator on each Windows Server 2012 R2 host in the cluster.

Hosting iSCSI LUNs with StarWind iSCSI SAN

We are going to review the basic steps to configure StarWind iSCSI SAN to start hosting LUNs for our cluster; the initial task is to add the host:

3.1 Select the Connect option for our local server.

3.2 With the host added, we can start creating the storage that will be published through iSCSI: right-click the server and select Add target, and a new wizard will appear.

3.3 Select the Target alias by which we'll identify the LUN we are about to create, and then configure it to allow clustering. The name below shows how we can identify this particular target in our iSCSI clients. Click on Next and then Create.

3.4 With our target created, we can start creating devices or LUNs within that target. Click on Add Device.

3.5 Select Hard Disk Device.

3.6 Select Virtual Disk. The other two options here are Physical Disk, with which we can select a hard drive and work in a pass-through model, and RAM Disk. RAM Disk is a very interesting option that lets us use a block of RAM as a hard drive, or LUN in this case. Because RAM is much faster than most other types of storage, files on a RAM disk can be accessed more quickly. But because the storage is actually in RAM, it is volatile, and its contents will be lost when the computer powers off.

3.7 In the next section we can select the disk location and size. In my case I'm using the E:\ drive and 1 GB.

3.8 Since this is a virtual disk, we can choose either thick provisioning (space is allocated in advance) or thin provisioning (space is allocated as it is required). For some applications, thick provisioning can be slightly faster than thin provisioning.

The LSFS options available in this case are Deduplication enabled (a procedure that saves space, since only unique data is stored and duplicated data is stored as links) and Auto defragmentation (which helps reclaim space when old data is overwritten or snapshots are deleted).
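The "only unique data is stored, duplicates are stored as links" idea can be illustrated with a small Python sketch. It is a generic block-level deduplication model under my own assumptions (fixed-size blocks identified by a SHA-256 digest), not a description of how StarWind implements the option; the class and method names are hypothetical.

```python
import hashlib


class DedupStore:
    """Toy deduplicating store: identical blocks are kept only once."""

    def __init__(self):
        self.blocks = {}  # digest -> block data, stored a single time
        self.links = []   # logical block order, as a list of digests

    def write(self, data: bytes) -> str:
        """Store a block; a duplicate only adds a link to the existing copy."""
        digest = hashlib.sha256(data).hexdigest()
        self.blocks.setdefault(digest, data)
        self.links.append(digest)
        return digest

    def read(self, logical_index: int) -> bytes:
        """Follow the link for a logical block to the stored data."""
        return self.blocks[self.links[logical_index]]

    def logical_size(self) -> int:
        """Bytes the clients believe they have written."""
        return sum(len(self.blocks[d]) for d in self.links)

    def physical_size(self) -> int:
        """Bytes actually kept after deduplication."""
        return sum(len(b) for b in self.blocks.values())
```

Writing three 4096-byte blocks where the first and third are identical gives a logical size of 12288 bytes but a physical size of 8192 bytes, which is where the space saving comes from.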

3.9 In the next section we can select whether to use disk caching to improve read and write performance on this disk. The first option works with the memory cache, for which we can select write-back (asynchronous, with better performance but a higher risk of inconsistencies), write-through (synchronous, with slower performance but no risk of data inconsistency), or no cache at all.

Caching can significantly increase the performance of some applications, particularly databases, that perform large amounts of disk I/O. High Speed Caching operates on the principle that server memory is faster than disk. The memory cache stores data that is more likely to be required by applications. When a program requests data from the disk, the cache is first searched for the relevant block. If the block is found the program uses it; otherwise the data from the disk is loaded into a new block of the memory cache.
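The read lookup just described, and the difference between the write-through and write-back modes from step 3.9, can be sketched as follows. This is a simplified Python model under my own assumptions (a dictionary stands in for the disk, and there is no eviction or size limit); it does not reflect StarWind's actual cache, and all names are hypothetical.

```python
class CachedDisk:
    """Toy block cache in front of a slow 'disk' (a plain dict here)."""

    def __init__(self, disk: dict, mode: str = "write-through"):
        if mode not in ("write-through", "write-back"):
            raise ValueError("mode must be 'write-through' or 'write-back'")
        self.disk = disk
        self.mode = mode
        self.cache = {}
        self.dirty = set()  # blocks changed in the cache but not yet on disk
        self.hits = 0
        self.misses = 0

    def read(self, block):
        """Look in the cache first; on a miss, load the block from disk."""
        if block in self.cache:
            self.hits += 1
        else:
            self.misses += 1
            self.cache[block] = self.disk[block]
        return self.cache[block]

    def write(self, block, data):
        """Update the cache; hit the disk now or later depending on the mode."""
        self.cache[block] = data
        if self.mode == "write-through":
            self.disk[block] = data  # synchronous: disk is always current
        else:
            self.dirty.add(block)    # asynchronous: disk is updated on flush

    def flush(self):
        """Write all dirty blocks to disk (only relevant for write-back)."""
        for block in self.dirty:
            self.disk[block] = self.cache[block]
        self.dirty.clear()
```

In write-back mode the disk still holds the old data until `flush()` runs. If the server loses power in that window, the change is lost, which is the inconsistency risk mentioned above; write-through avoids it at the cost of waiting for the disk on every write.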

3.10 StarWind v8 adds a new layer to the caching concept: L2 cache. This type of cache is a virtual file intended to be placed on SSD drives for high performance. In this section we have the option to create an L2 cache file, for which again we can select write-back or write-through.

3.11 Also, we will need to select a path for the L2 cache file.

3.12 Click on Finish and the device will be ready to be used.

3.13 In my case I've also created a second device in the same target.

Configure Windows Server 2012 R2 iSCSI Initiator

Each host must have access to the file we've just created in order to be able to create our Failover Cluster. On each host, execute the following:

3.14 Access Administrative Tools, iSCSI Initiator.

We will also receive the notification "The Microsoft iSCSI service is not running"; click Yes to start the service.

3.15 In the Target pane, type in the IP address used by the target host, our iSCSI server, to receive the connections. Remember to use the IP address dedicated to iSCSI connections; if the StarWind iSCSI SAN server also has a public connection we can use that too, but the traffic will then be directed through that network adapter.

3.16 Click on Quick Connect to be authorized by the host to use these files.

Once we've connected to the files, open Disk Management to verify we can now use them as storage attached to the operating system.

3.17 As a final step, using only the first host in the cluster, bring the storage file online and also select Initialize Disk. Since these are treated as normal hard disks, the process for initializing a LUN is no different from initializing a physical, local hard drive in the server.

Now, let's take a look at the Failover Cluster feature.

4. Install Failover Cluster feature and Run Cluster Validation

Before configuring the cluster, we need to enable the Failover Cluster feature on all hosts in the cluster, and we'll also run the verification tool provided by Microsoft to validate the consistency and compatibility of our scenario.

4.1 In Server Manager, access the option Add Roles and Features.

4.2 Start the wizard; do not add any role in Server Roles. In Features, enable the Failover Clustering option.

4.3 Once installed, open the console from Administrative Tools. Within the console, the option we are interested in at this stage is Validate a Configuration.

4.4 In the new wizard, we are going to add the hosts that will form the Failover Cluster in order to validate the configuration. Type in the servers' FQDNs or browse for their names; click on Next.

4.5 Select Run all tests (recommended) and click on Next.

4.6 In the following screen we can see a detailed list of all the tests that will be executed; note that the storage tests take some time. Click on Next.

If we've fulfilled the requirements reviewed earlier, the test will complete successfully. In my case the report generated a warning, but the configuration is supported for clustering.

Opening the report gives us detailed information. In this scenario the Network section generated a warning: "Node <1> is reachable from Node <2> by only one pair of network interfaces. It is possible that this network path is a single point of failure for communication within the cluster. Please verify that this single path is highly available, or consider adding additional networks to the cluster." This is not a critical error and can easily be solved by adding at least one more adapter to the cluster configuration.

4.7 Leaving the option Create the cluster now using the validated nodes enabled will start the Create Cluster wizard as soon as we click Finish.

5. Create Windows Server 2012 R2 Failover Cluster

At this stage, we've completed all the requirements and validated our configuration successfully. In the following steps, we are going to see the simple procedure to configure our Windows Server 2012 R2 Failover Cluster.

5.1 In the Failover Cluster console, select the option for Create a cluster.

5.2 A wizard similar to the one in the validation tool will appear. The first thing to do is add the servers we would like to cluster; click on Next.

5.3 In the next screen we have to select the cluster name and the IP address assigned. Remember that in a cluster, all machines are represented by one name and one IP.

5.4 In the summary page click on Next.

After a few seconds the cluster will be created and we can also review the report for the process.

Now in our Failover Cluster console, we'll get the complete picture of the cluster we've created: the nodes involved, the storage associated with the cluster, the networks, and the events related to the cluster.

The default option for a two-node cluster is to use a disk as a witness to manage cluster quorum. This is usually a disk to which we assign the letter Q:\, and it does not store a large amount of data. The quorum disk stores a small amount of information containing the cluster configuration; its main purpose is cluster voting.

To back up the Failover Cluster configuration we only need to back up the Q:\ drive. This, of course, does not back up the services configured in the Failover Cluster.

Cluster voting is used to determine, in case of a disconnection, which nodes and services will stay online. For example, if a node is disconnected from the cluster and the shared storage, the remaining node, with one vote, and the quorum disk, also with one vote, decide that the cluster and its services will remain online.
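The arithmetic behind that example is a simple majority count, which can be sketched in Python. This is a simplified static model for illustration only: it gives each node and the witness one vote and ignores refinements such as the dynamic vote adjustment that Windows Server can apply. The function names are hypothetical.

```python
def has_quorum(votes_present: int, total_votes: int) -> bool:
    """A partition keeps the cluster online only with a strict majority."""
    return votes_present > total_votes // 2


def partition_stays_online(nodes_up: int, total_nodes: int,
                           has_witness: bool, witness_reachable: bool) -> bool:
    """Count one vote per reachable node plus one for a reachable witness."""
    total_votes = total_nodes + (1 if has_witness else 0)
    votes_present = nodes_up + (1 if has_witness and witness_reachable else 0)
    return has_quorum(votes_present, total_votes)
```

For the two-node cluster with a disk witness, `partition_stays_online(1, 2, True, True)` is true (2 of 3 votes), while the disconnected node, which cannot reach the witness, evaluates to false (1 of 3 votes). Without a witness, a single surviving node holds only 1 of 2 votes and cannot keep the cluster online, which is why the witness is the default for two nodes.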

This voting is used as the default option but can be modified in the Failover Cluster console. Modifying it is recommended in various scenarios: with an odd number of nodes, a Node Majority quorum should be used; and for a cluster stretched across different geographical locations, the recommendation is to use an even number of nodes with a file share as a witness in a third site.

También podría gustarte

- Cisco Certified Entry NetworkingDocumento1 páginaCisco Certified Entry NetworkingRAJIV MURALAún no hay calificaciones

- SC LabsDocumento8 páginasSC LabsRAJIV MURALAún no hay calificaciones

- Windows Server 2012 Feature ComparisonDocumento38 páginasWindows Server 2012 Feature Comparisonhangtuah79Aún no hay calificaciones

- Implement An eBGP Based Solution, Given A Network Design and A Set of RequirementsDocumento19 páginasImplement An eBGP Based Solution, Given A Network Design and A Set of RequirementsJuan CarlosAún no hay calificaciones

- Wsus ConfigurationDocumento45 páginasWsus ConfigurationRAJIV MURALAún no hay calificaciones

- Haryana Vehicle NoDocumento1 páginaHaryana Vehicle NoRAJIV MURALAún no hay calificaciones

- Security PDFDocumento11 páginasSecurity PDFElja MohcineAún no hay calificaciones

- HP Proliant TeamingDocumento13 páginasHP Proliant TeamingRAJIV MURALAún no hay calificaciones

- EWAN Student Lab Skills Based AssessmentDocumento4 páginasEWAN Student Lab Skills Based AssessmentLisa SchneiderAún no hay calificaciones

- Electric Motor Test RepairDocumento160 páginasElectric Motor Test Repairrotorbrent100% (2)

- Haryana Vehicle NoDocumento1 páginaHaryana Vehicle NoRAJIV MURALAún no hay calificaciones

- Step by Step Guide To Download and Install Backup Exec 2012Documento20 páginasStep by Step Guide To Download and Install Backup Exec 2012RAJIV MURALAún no hay calificaciones

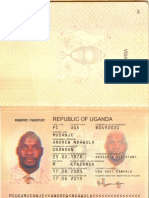

- PassPort DetailsDocumento1 páginaPassPort DetailsRAJIV MURALAún no hay calificaciones

- Jameen Related TermsDocumento1 páginaJameen Related TermsRAJIV MURALAún no hay calificaciones

- Win7 Troubleshooting Training PlanDocumento3 páginasWin7 Troubleshooting Training PlanRAJIV MURALAún no hay calificaciones

- CricketDocumento1 páginaCricketRAJIV MURALAún no hay calificaciones

- Taxes in IndiaDocumento6 páginasTaxes in IndiaRAJIV MURALAún no hay calificaciones

- Vmware Vsphere TrainingDocumento10 páginasVmware Vsphere TrainingRaj YadavAún no hay calificaciones

- CSD RatesDocumento6 páginasCSD RatesRAJIV MURALAún no hay calificaciones

- Price ListDocumento4 páginasPrice ListRAJIV MURALAún no hay calificaciones

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeCalificación: 4 de 5 estrellas4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingDe EverandThe Little Book of Hygge: Danish Secrets to Happy LivingCalificación: 3.5 de 5 estrellas3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryCalificación: 3.5 de 5 estrellas3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceCalificación: 4 de 5 estrellas4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)De EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Calificación: 4 de 5 estrellas4/5 (98)

- Shoe Dog: A Memoir by the Creator of NikeDe EverandShoe Dog: A Memoir by the Creator of NikeCalificación: 4.5 de 5 estrellas4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureCalificación: 4.5 de 5 estrellas4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe EverandNever Split the Difference: Negotiating As If Your Life Depended On ItCalificación: 4.5 de 5 estrellas4.5/5 (838)

- Grit: The Power of Passion and PerseveranceDe EverandGrit: The Power of Passion and PerseveranceCalificación: 4 de 5 estrellas4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaCalificación: 4.5 de 5 estrellas4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerDe EverandThe Emperor of All Maladies: A Biography of CancerCalificación: 4.5 de 5 estrellas4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealDe EverandOn Fire: The (Burning) Case for a Green New DealCalificación: 4 de 5 estrellas4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersCalificación: 4.5 de 5 estrellas4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnDe EverandTeam of Rivals: The Political Genius of Abraham LincolnCalificación: 4.5 de 5 estrellas4.5/5 (234)

- Rise of ISIS: A Threat We Can't IgnoreDe EverandRise of ISIS: A Threat We Can't IgnoreCalificación: 3.5 de 5 estrellas3.5/5 (137)

- The Unwinding: An Inner History of the New AmericaDe EverandThe Unwinding: An Inner History of the New AmericaCalificación: 4 de 5 estrellas4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyCalificación: 3.5 de 5 estrellas3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreCalificación: 4 de 5 estrellas4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Calificación: 4.5 de 5 estrellas4.5/5 (119)

- The Perks of Being a WallflowerDe EverandThe Perks of Being a WallflowerCalificación: 4.5 de 5 estrellas4.5/5 (2099)

- Her Body and Other Parties: StoriesDe EverandHer Body and Other Parties: StoriesCalificación: 4 de 5 estrellas4/5 (821)

- CTR 8540 3.4.0 Getting Started Configuration - June2017Documento201 páginasCTR 8540 3.4.0 Getting Started Configuration - June2017Sandra Milena Viracacha100% (2)

- PST-VT103A & PST-VT103B User ManualDocumento32 páginasPST-VT103A & PST-VT103B User ManualMario FaundezAún no hay calificaciones

- Aruba Instant On AP11: Wi-Fi Designed With Small Businesses in MindDocumento5 páginasAruba Instant On AP11: Wi-Fi Designed With Small Businesses in MindAchmad AminAún no hay calificaciones

- Configuring Cisco Easy VPN and Easy VPN Server Using SDM: Ipsec VpnsDocumento56 páginasConfiguring Cisco Easy VPN and Easy VPN Server Using SDM: Ipsec VpnsrajkumarlodhAún no hay calificaciones

- Sakura Taisen 4 - Act 2Documento64 páginasSakura Taisen 4 - Act 2Sakura Wars50% (2)

- SAP Material DetailsDocumento12 páginasSAP Material DetailsRanjeet GuptaAún no hay calificaciones

- Cisco Cybersecurity Scholarship Agreement: 1. ScopeDocumento8 páginasCisco Cybersecurity Scholarship Agreement: 1. ScopecabronestodosAún no hay calificaciones

- 10 - HeatDocumento17 páginas10 - HeatPedro BrizuelaAún no hay calificaciones

- Braindump2go 312-50v11 Exam Dumps Guarantee 100% PassDocumento8 páginasBraindump2go 312-50v11 Exam Dumps Guarantee 100% PassLê Huyền MyAún no hay calificaciones

- Li FiDocumento6 páginasLi FiRakesh ChinthalaAún no hay calificaciones

- SAP Security GuideDocumento65 páginasSAP Security Guidegreat_indianAún no hay calificaciones

- Skype For Business A95B-EE5E ATHDocumento15 páginasSkype For Business A95B-EE5E ATHMaria Jose Garcia CifuentesAún no hay calificaciones

- Avaya SBCE SME Platform Fact SheetDocumento2 páginasAvaya SBCE SME Platform Fact SheetlykorianAún no hay calificaciones

- Data CommunicationDocumento18 páginasData CommunicationSiddhantSinghAún no hay calificaciones

- Fortiap S Fortiap w2 v6.0.5 Release Notes PDFDocumento10 páginasFortiap S Fortiap w2 v6.0.5 Release Notes PDFtorr123Aún no hay calificaciones

- Unit 2Documento42 páginasUnit 2Parul BakaraniyaAún no hay calificaciones

- Node-Irc Documentation: Release 2.1Documento22 páginasNode-Irc Documentation: Release 2.1Benjamin3HarrisAún no hay calificaciones

- 20765C 07Documento29 páginas20765C 07douglasAún no hay calificaciones

- Telecontrol 84i+Documento76 páginasTelecontrol 84i+Hans Jørn ChristensenAún no hay calificaciones

- Azin Dastpak Cognitive Radio Networks PaperDocumento39 páginasAzin Dastpak Cognitive Radio Networks PaperMaha MohamedAún no hay calificaciones

- 1511 MAX DsDocumento4 páginas1511 MAX DsOchie RomeroAún no hay calificaciones

- Low Power Sub-1 GHZ RF TransmitterDocumento61 páginasLow Power Sub-1 GHZ RF Transmitterkamesh_patchipala9458Aún no hay calificaciones

- Firewalls: Dr.P.V.Lakshmi Information Technology GIT, GITAM UniversityDocumento31 páginasFirewalls: Dr.P.V.Lakshmi Information Technology GIT, GITAM UniversityNishant MadaanAún no hay calificaciones

- Troubleshooting Ccme Ip Phone RegistrationDocumento14 páginasTroubleshooting Ccme Ip Phone RegistrationganuiyerAún no hay calificaciones

- Continuous Monitoring of Train ParametersDocumento61 páginasContinuous Monitoring of Train ParametersPraveen MathiasAún no hay calificaciones

- Datasheet of DS E04HGHI B - V4.71.200 - 20230413Documento4 páginasDatasheet of DS E04HGHI B - V4.71.200 - 20230413jose paredesAún no hay calificaciones

- PRB MLB Baseline ParameterDocumento12 páginasPRB MLB Baseline ParameterAdil Murad100% (1)

- Manual Ticket Printer - FutureLogic GEN2 (PSA-66-ST2R) 2.0Documento16 páginasManual Ticket Printer - FutureLogic GEN2 (PSA-66-ST2R) 2.0Marcelo LambreseAún no hay calificaciones

- Installation License File PDFDocumento2 páginasInstallation License File PDFPhanChauTuanAún no hay calificaciones

- Chapter 2 Network TopologyDocumento22 páginasChapter 2 Network TopologyAbir HasanAún no hay calificaciones